By Peter Godfrey-Smith, Boston Review, June 3, 2013

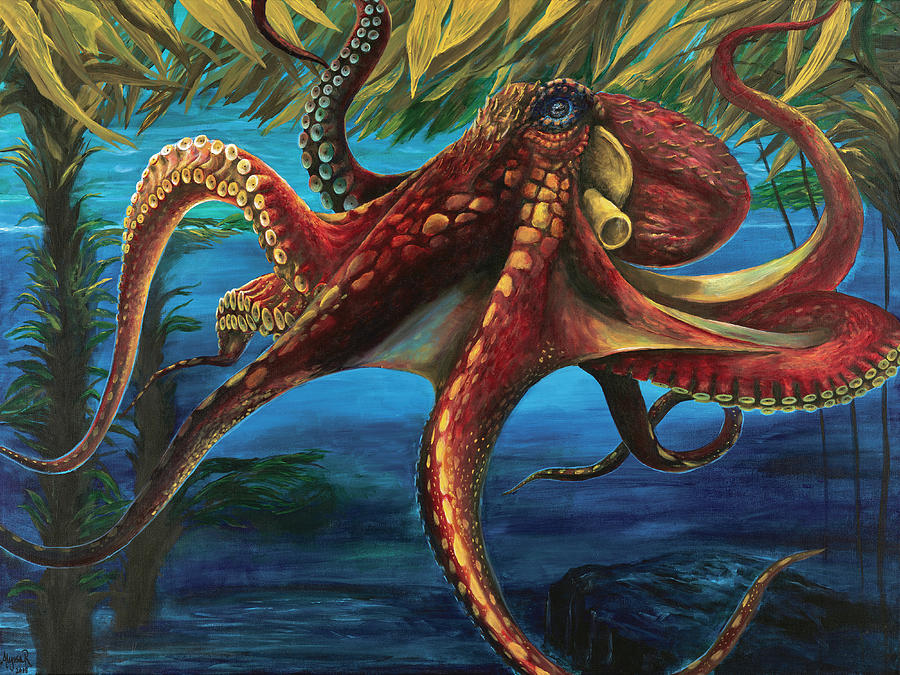

If octopuses did not exist, it would be necessary to invent them. I don’t know if we could manage this, so it’s as well that we don’t have to. As we explore the relations between mind, body, evolution, and experience, nothing stretches our thinking the way an octopus does.

In a famous 1974 paper, the philosopher Thomas Nagel asked: What is it like to be a bat? He asked this in part to challenge materialism, the view that everything that goes on in our universe comprises physical processes and nothing more. A materialist view of the mind, Nagel said, cannot even begin to give an explanation of the subjective side of our mental lives, an account of what it feels like to have thoughts and experiences. Nagel chose bats as his example because they are not so simple that we doubt they have experiences at all, but they are, he said, “a fundamentally alien form of life.”

Bats certainly live lives different from our own, but evolutionarily speaking they are our close cousins, fellow mammals with nervous systems built on a similar plan. If we want to think about something more truly alien, the octopus is ideal. Octopuses are distant from us in evolutionary terms, have a nervous system of very different design, and bodies with no bones and little fixed shape at all. What is it like to be an octopus? The question is intrinsically interesting and, beyond that, provides a good way to chip away at the problem Nagel raised for a materialist understanding of the mind.

* * *

How do we approach questions about “what it’s like” to be something or someone? One way of asking these questions makes them impossible to answer regardless of what minds might be made of. In this interpretation, to ask what it’s like to be a bat or an octopus is to ask for a description, given from a third-person point of view, that encapsulates the animal’s experience itself. But having an experience will always be different from having a description of it. This will be true if we are biochemical machines and true also if there is a soul-like extra ingredient in the world. A gap between a first-person and a third-person point of view arises either way.

Descriptions are not completely powerless, though, in helping us get a grip on what the experience of another might be like. What a description can do, often very effectively, is prompt memories and guide the imagination: it can elicit memories of experiences that one has actually had and guide the construction of variations on these memories. Whenever one person describes an important experience to another, we rely on this sort of use of memory and imagination. It is more difficult if someone or something cannot talk, cannot offer a usable description in their own words. Then if we want to get a sense of what their experience might feel like, we must draw on information about their other forms of behavior and about how their senses and nervous systems work. If what is going on in them can be mapped onto what is going on in us when we have an experience that we know firsthand, we can say something about what an experience is like for them. Doing this does rely on the assumption that there is a systematic relation between how things feel and what goes on in the nervous system, just as listening to what someone says requires the assumption that real experiences lie behind her words.

The biologist Richard Dawkins offered a reply to Nagel’s challenge about bats in his 1986 book The Blind Watchmaker. One of the main differences between bats and ourselves, emphasized by Nagel, is bats’ use of sonar, sound pulses, for navigation. Nagel said that this was unlike any sense that we possess, and we cannot reach bat experience by imagining ourselves to have a more elaborate form of hearing. Dawkins replied that the use of sound in both human hearing and bat sonar is an incidental matter. Instead, using sonar as a bat would feel similar to the way seeing feels for us. We should not imagine sonar as upgraded hearing, but as modified seeing.

Dawkins based this claim on what sonar does for a bat. An animal that uses sonar constructs an internal model of the location of objects in space on the basis of stimuli from its environment. The information made available by sonar is not exactly the same as that made available by vision, and the resulting internal models will certainly differ, but, Dawkins argues, the feel of vision results from the way it enables you to make your way through the world, and that gives us some indication of what it feels like to navigate with sonar. This argument does not require that the same parts of the brain be used for each sense. Strikingly though, a 2011 brain-imaging study by Lore Thaler and her colleagues found that blind humans with some natural ability to echolocate using mouth-clicks were using parts of their brains normally dedicated to vision to process the clicks.

To work out what it might feel like to be another animal, we have to find some way to justify mappings between what goes on inside that animal and experiences that we can, through memory and imagination, partly conjure in ourselves. We do the same thing with humans who have different capacities and backgrounds from our own. Nagel accepted this claim about the human case but added that the more biologically distant the subject whose experiences we are inquiring into, “the less success one can expect with this enterprise.” I agree that the more distant the subject, the more difficult the project becomes. But who’s to say that success must remain elusive? On to the octopus.

* * *

An octopus has neurons, more or less like those of other animals, and many of them are organized into a brain. This brain evolved on an evolutionary path far removed from our own. All animals have common ancestors if we go back far enough in time, and the pattern of relatedness between different animal species takes the rough form of a tree, the “tree of life.” Our common ancestor with octopuses lies back near the beginning of the evolution of complex animals, perhaps 600 million years ago. That ancestor was a small, simple, marine animal—probably a flattened worm. Its many descendants include, on one branch, humans and the other animals with backbones (dolphins, bats, birds), and on another branch, a huge range of invertebrate animals, including the octopus.

The octopus’s evolutionary path from the ancestral worm is unusual among the invertebrates because it led, as our path did, to a large nervous system. A common octopus has around 500 million neurons. That is many fewer than we have, but it is in the same range as a dog’s brain, which has 600 million or so. The octopus, along with some of its cephalopod cousins, is an independent experiment in the evolution of a large nervous system, the only such experiment outside the vertebrates.

The result is an animal that is curious and a problem-solver. Some octopuses carry pairs of coconut half-shells around to reconstruct as spherical shelters. Octopuses can recognize (and take a disliking to) individual human keepers in aquariums. They learn the layout of their environment and hunt on long loops that take them reliably back to a den. Octopuses have eyes built on a “camera” design like ours, with a lens focusing an image. They also have sensitive chemical sensors in their suckers—they taste the world as they touch it. When watching their eyes, it is natural to think that perhaps octopuses are a bit like us, just with more arms and no bones. Like other animals, they use their senses to track what is going on around them and to guide action. Would being an octopus be so different from being a bat, or any other animal with fine-tuned senses and a complex nervous system?

Underneath the skin, though, octopuses have an organization that takes them even further from us than they appear on the outside. Invertebrates generally have less centralized, more “distributed” nervous systems than vertebrates such as us. Octopuses are mollusks (like oysters and clams), and their nervous systems are organized in part into ganglia, little knots of nerve cells, with links between the knots. Most mollusks do not have much of a central brain. Starting out from a molluskan layout of this kind, evolution increased the size of the octopus nervous system enormously. The outcome of this process was uncovered in the mid-twentieth century, especially by John Z. Young and Martin Wells, working at the Naples Zoological Station in Italy. They found a number of surprises, some of which had consequences for all of biology. In 1936 Young described nerve cells in squid that are vastly larger than those of other animals, so big that electrodes could be inserted directly into them to measure their electrical activity. Work on those neurons became the basis for our understanding of how all nerve cells function.

Young, Wells, and their colleagues found that the octopus nervous system has three main parts. One is a central brain, which is a squashing-together of many expanded ganglia. There are also two optic lobes, large structures directly behind each eye. But most of the neurons—about two-thirds of them—are not in the head at all, but in the arms themselves. The connections between the central brain and this more peripheral nervous system also seemed to these researchers to be quite slim. An octopus’s arms are packed with sensors responding to touch and chemistry, and the arms have enormous flexibility, able to bend in any direction at any point. So there is a lot going on in the arms, but the connections between arms and brain are apparently restricted to a narrow channel. From these anatomical facts and some experiments on behavior, the early researchers inferred that the arms have a good degree of independence from the central brain. They do their own sensing and their own responding. As Roger Hanlon and John Messenger summarized it in their 1996 book Cephalopod Behavior, the arms seemed “curiously divorced” from the brain, at least with respect to the control of basic motions.

This disrupts a first round of guesses about what it might be like to be an octopus. Working from our understanding of their senses and the way they live their lives, it’s natural to first imagine that the experience of an octopus is visually rich (though apparently in black-and-white) and augmented with elaborate chemical sensing. Everything touched by the arms is tasted. We can imagine something about what it might be like to live in this bright and tasty world. But then we realize that this line of thought might be fundamentally mistaken, as it assumes that an octopus is the same kind of psychological unit that a person is. It assumes that the locus of octopus experience is a psychological self, though perhaps a simple one, where the senses converge to generate a feeling of how the world is. This picture might be wrong because an octopus is not organized as we are. Vision certainly feeds into the central brain, but each arm also contains shorter arcs between sensing and action.

This organization of the animal’s control systems is the most difficult barrier to working out what octopus experience might be like. The best work I know of that bears on these issues is coming out of Benny Hochner’s laboratory at Hebrew University in Jerusalem. In a 2011 study from Hochner’s lab, Tamar Gutnick and her colleagues looked at whether an octopus could guide a single arm along a complex maze-like path to a specific location to get food. The task was set up in such a way that the octopus could not merely let the arm’s sensors follow a chemical gradient to the food, as the arm had to leave the water at one point to reach the target location. But the maze walls were transparent and the target location could be seen. To solve the problem the octopus had to guide its arm through the maze with vision. Although it took a while, all but one of the octopuses in the experiment learned to get an arm through to the food. The study also noted, though, that when octopuses are doing well with this task, the arm finding the food does what looks like its own local exploration at various stages, crawling and feeling around. There may be a mixture of two forms of control here: central control of the arm’s general path and fine-tuning of the search by the arm itself. Another possibility is that, by means of attention of some kind, the octopus is exerting control over all the details of movements that might usually be more autonomous.

Suppose that the “mixed-control” option, which Hochner tends to favor as an interpretation, is right. What would octopus experience be like? A range of partial analogies can be drawn with the human case. I visited Hochner’s lab with another philosopher, Laura Franklin-Hall, who wondered: Would an octopus experience its arms more as parts of its environment than as straightforward parts of itself? The arms would not be experienced entirely as environment, because they can be centrally controlled to some extent—they are less “divorced” from the brain than earlier researchers suspected. But once an arm has been sent in a certain direction, to some extent it is on its own. An analogy might be drawn with actions such as blinking or breathing. These are activities that normally happen involuntarily, but through attention you can assert control over them. The analogy is imperfect because although breathing is normally involuntary, when you do intervene to do it voluntarily, the control can be very fine-grained. In that case, attention is used to take over what is normally an automatic process. In the octopus, if the mixed-control interpretation is right, central guidance of the movements is never complete, and the peripheral system always has its say. Expressed too anthropomorphically, you would send an arm out deliberately and hope the local fine-tuning goes right.

Action by an octopus, then, would mix elements that are usually distinct in animals like us. When we act, the border between self and environment is usually fairly clear. When we move an arm, the arm can be controlled both in its general path and in the details. You can then watch your arm move, but what you are watching are the consequences of choices, or perhaps of habits that are the remnants of earlier choices. Various other things in the environment are not under your direct control at all, though they can be moved indirectly by manipulating them with your limbs. Uncontrolled movement by an object around you is usually a sign that it is not part of you at all (with partial exceptions for knee-jerk reflexes and the like). If you were an octopus, these distinctions would be blurred. Your arms would move in a way that is a mix of the centrally and peripherally controlled. To some extent you would guide them, and to some extent you would just watch them go.

One might wonder whether the guided action seen in the Gutnick experiment is a normal behavior for an octopus or instead something entirely artificial. When searching for food, an octopus often puts all its arms around or under a rock and seems to just let them roam. If the experimental behavior is unusual, that would not make the work uninteresting. The fact that the octopus can solve the problem—can pull itself together in this way—would still be significant. However, the behaviors in the experiment may not be that unnatural in any case. I once saw an octopus searching for food at a boulder underneath which a large shark was resting. The octopus held its body well back and stretched one arm under the boulder, very long and straight, and seemed to watch its path closely.

There is also a more directly skeptical response to these ideas about octopus experience and the self-environment relationship. I’ve assumed up to this point that it makes sense to think that an octopus could have a feeling of agency, a feeling that tracks the difference between what it is controlling and what is merely happening. But perhaps this is so sophisticated a form of experience that it is beyond any non-human animal? Although animals do act, perhaps they cannot feel that they are doing so; the contrast between actions and other events would not be apparent to them.

For at least some animals, this is probably not true: they may indeed have awareness of a distinction between events they cause and those they do not. This has been the topic of a number of interesting recent experiments. In these studies chimps or monkeys first learn to play simplified video games, moving virtual objects by using a joystick, trackball, or another controller, trying to get certain objects to meet or collide and, in some cases, trying to get other objects to avoid collision. They then perform tasks of these kinds while another “distracter” object moves on the screen in similar ways to the object they are controlling. The objects then freeze and the chimps’ second task—the one the experiment is set up to study—is to indicate which object was moving under their control.

In a 2011 study by Takaaki Kaneko and Masaki Tomonaga, chimps did well on a task of this kind. They guided an object toward a moving target and then picked it out from a distracter whose motions were those of an object that had been guided by a chimp on an earlier trial. It’s reasonable to wonder if chimps are a special case here, but Justin Couchman has done a related series of experiments on rhesus monkeys. Couchman’s experiment had the monkeys doing a harder task than the chimps were faced with, and one of his four monkeys clearly mastered it.

Every report of this kind I have read raises interesting further puzzles. Kaneko and Tomonaga ran an additional experiment in which the chimps were controlling neither icon as it moved. Instead they were watching a recording of an entire earlier trial. Moving the trackball had no effect on any object. As expected, the chimps did better at choosing the right object when they were actually controlling it. But surprisingly, they did not do too badly, performing better than chance, even when neither object was under their control. How is this possible? What does it even mean to get the “right” answer when neither object is being controlled? The “right” object was the one that had been controlled in the earlier trial when it was recorded. The object being controlled at that time will tend to follow the target more closely than the object that had been a distracter in that trial. This difference in apparent goal-directedness might lead the chimps to be more inclined to choose that object. Given this, it is important that the chimps made better choices when they had real control of one of the objects.

Nothing like these experiments has been tried in octopuses, as far as I know, and a chimp or a monkey is a very different animal from an octopus. But the experiments certainly tell against the idea that awareness of agency is beyond all non-human animals.

Some philosophers working within a broadly materialist framework are opposed to asking questions about “what it’s like” to have a particular kind of mind. They regard this way of setting up the issues as misguided. I think these questions are good ones, as long as they are asked in a way that does not doom them to unanswerability from the start. The divide between first-person and third-person points of view is real regardless of what minds are made of. Knowing how an animal’s body and brain are put together does not put you into a state that is similar to what is going on inside that animal, so in that sense no description can tell you “what it’s like to be” that animal. Getting a sense of what it feels like to be another animal—bat, octopus, or next-door neighbor—must involve the use of memory and imagination to produce what we think might be faint analogues of that other animal’s experiences. This project can be guided by knowledge of how the animal is put together and how it lives its life. When the animal is as different from us as an octopus, the task is certainly difficult, but it is one worth undertaking. Doing so is part of the attempt to strike a balance between treating our minds as too private and mysterious to make scientific sense of at all, and treating them as less private and mysterious than they really are.
