By Pew Research Center, November 20, 2018

What makes life meaningful? Answering such a big question might be challenging for many people. Even among researchers, there is little consensus about the best way to measure what brings human beings satisfaction and fulfillment. Traditional survey questions – with a prespecified set of response options – may not capture important sources of meaning.

To tackle this topic, Pew Research Center conducted two separate surveys in late 2017. The first included an open-ended question asking Americans to describe in their own words what makes their lives feel meaningful, fulfilling or satisfying. This approach gives respondents an opportunity to describe the myriad things they find meaningful, from careers, faith and family, to hobbies, pets, travel, music and being outdoors.

The second survey included a set of closed-ended (also known as forced-choice) questions asking Americans to rate how much meaning and fulfillment they draw from each of 15 possible sources identified by the research team. It also included a question asking which of these sources gives respondents the most meaning and fulfillment. This approach offers a limited series of options but provides a measure of the relative importance Americans place on various sources of meaning in their lives.

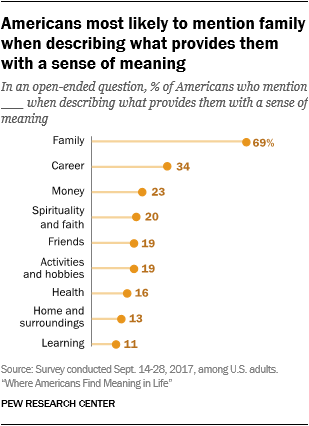

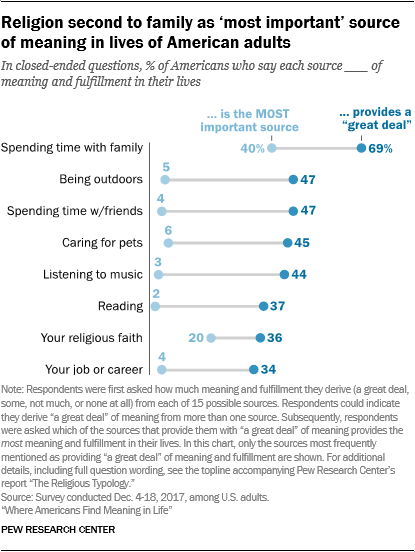

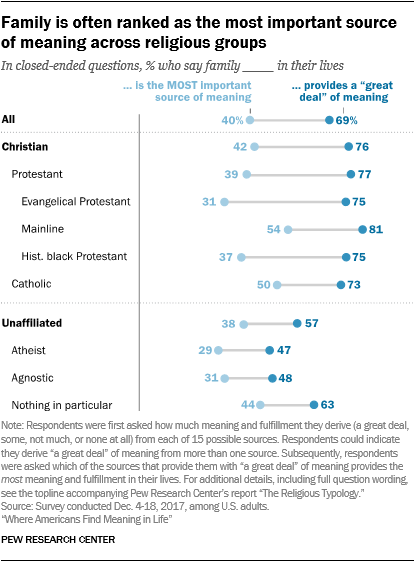

Across both surveys, the most popular answer is clear and consistent: Americans are most likely to mention family when asked what makes life meaningful in the open-ended question, and they are most likely to report that they find “a great deal” of meaning in spending time with family in the closed-ended question.

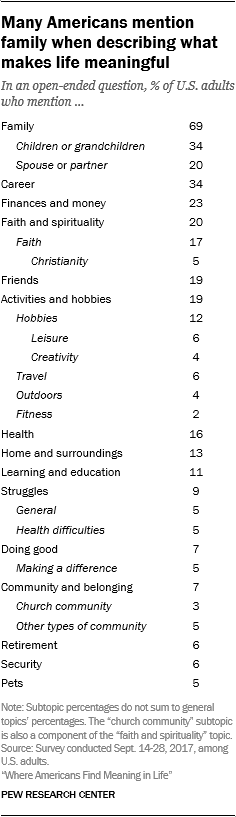

But after family, Americans mention a plethora of sources (in the open-ended question) from which they derive meaning and satisfaction: One-third bring up their career or job, nearly a quarter mention finances or money, and one-in-five cite their religious faith, friendships, or various hobbies and activities. Additional topics that are commonly mentioned include being in good health, living in a nice place, creative activities and learning or education. Many other topics also arose in the open-ended question, such as doing good and belonging to a group or community, but these were not as common.

In the closed-ended question, the most commonly cited sources that provide Americans with “a great deal” of meaning and fulfillment (after family) include being outdoors, spending time with friends, caring for pets and listening to music. By this measure, religious faith ranks lower, on par with reading and careers. But among those who do find a great deal of meaning in their religious faith, more than half say it is the single most important source of meaning in their lives. Overall, 20% of Americans say religion is the most meaningful aspect of their lives, second only to the share who say this about family (40%).

People in a wide variety of social and demographic subgroups mention family as a key source of meaning and fulfillment. But there are some patterns in the sources of meaning that Americans cite, depending on their religion, socioeconomic status, race, politics and other factors.

Among the key findings from the surveys:

- Family is among the most popular topics across demographic groups. In response to the open-ended question, seven-in-ten Americans mention their family as a source of meaning and fulfillment, and a similar share say in the closed-ended question that family provides “a great deal” of meaning in their lives. While substantial shares in all major subgroups of Americans mention family, married people are more likely than those who are not married to cite family as a key source of meaning.

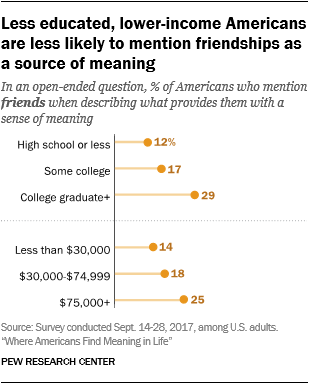

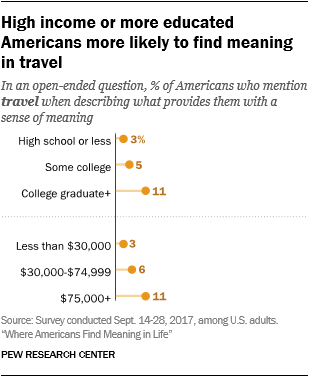

- Americans with high levels of household income and educational attainment are more likely to mention friendship, good health, stability and travel. A quarter of Americans who earn at least $75,000 a year mention their friends when asked to describe, in their own words, what makes life meaningful, compared with 14% of Americans who earn less than $30,000 each year. Similarly, 23% of higher-income U.S. adults mention being in good health, compared with 10% of lower-income Americans. And among those with a college degree, 11% each mention travel and a sense of security as things that make their lives fulfilling, compared with 3% and 2%, respectively, of those with a high school diploma or less.

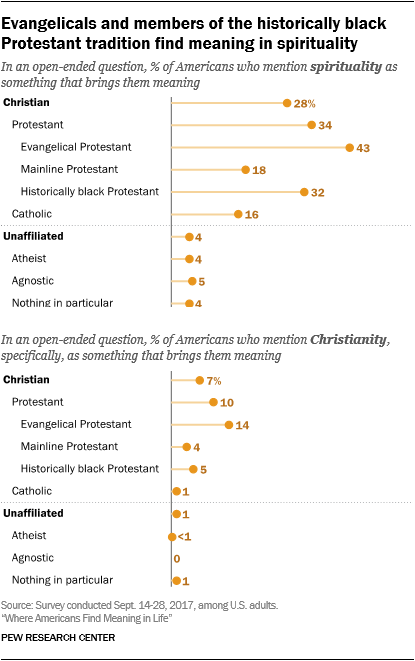

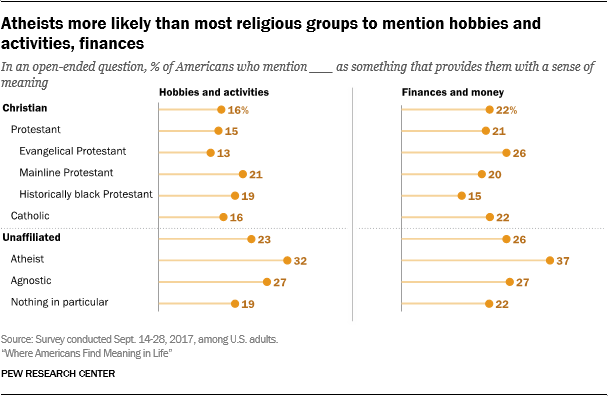

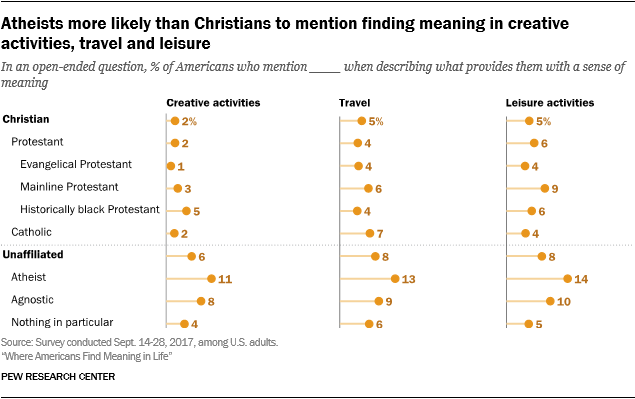

- Many evangelicals find meaning in faith, while atheists often find it in activities and finances. Spirituality and religious faith are particularly meaningful for evangelical Protestants, 43% of whom mention religion-related topics in the open-ended question. Among members of the historically black Protestant tradition, 32% mention faith and spirituality, as do 18% of mainline Protestants and 16% of Catholics. Evangelical Protestants’ focus on religious faith also emerges in the closed-ended survey: 65% say it provides “a great deal” of meaning in their lives, compared with 36% for the full sample. At the other end of the spectrum, atheists are more likely than Christians to mention finances (37%), and activities and hobbies (32%), including travel (13%), as things that make their lives meaningful. Atheists tend to have relatively high levels of education and income, but these patterns hold even when controlling for socioeconomic status.

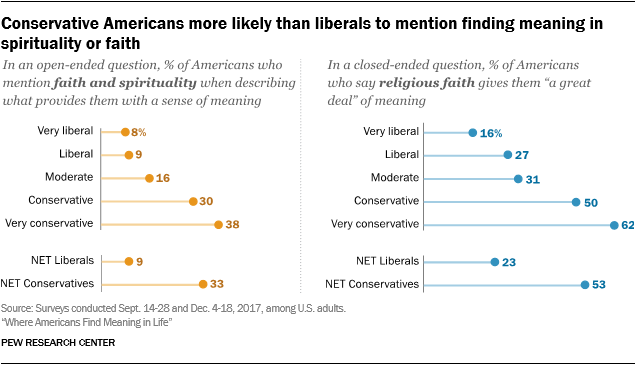

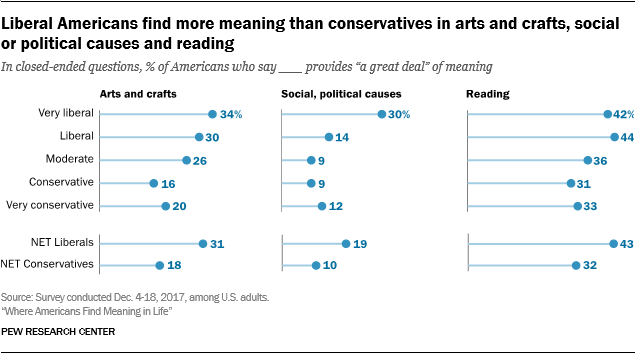

- Politically conservative Americans are more likely than liberals to find meaning in religion, while liberals find more meaning in creativity and causes than do conservatives. Spirituality and faith are commonly mentioned by very conservative Americans as imbuing their lives with meaning and fulfillment; 38% cite them in response to the open-ended question, compared with just 8% of very liberal Americans – a difference that holds even when controlling for religious affiliation. By contrast, the closed-ended question finds that very liberal Americans are especially likely to derive “a great deal” of meaning from arts or crafts (34%) and social and political causes (30%), compared with rates of 20% and 12% among very conservative Americans.

Measuring meaning

The closed-ended questions were included in a survey conducted Dec. 4 to 18, 2017, among 4,729 U.S. adults on Pew Research Center’s nationally representative American Trends Panel. Respondents were asked to indicate how much meaning and fulfillment they derive (“a great deal,” “some,” “not much” or “none at all”) from each of 15 possible sources. Additionally, respondents were asked to indicate which of the 15 items provides them with the most meaning and fulfillment. (For details on how the December survey was conducted, including full question wording, see Appendix B in “The Religious Typology.”)

The open-ended question was included in a survey conducted Sept. 14 to 28, 2017, among 4,867 U.S. adults on the American Trends Panel.¹ The question asked, “We’re interested in exploring what it means to live a satisfying life. Please take a moment to reflect on your life and what makes it feel worthwhile – then answer the question below as thoughtfully as you can. What about your life do you currently find meaningful, fulfilling or satisfying? What keeps you going, and why?”

Those answering the question were free to write as much as they wanted. The average respondent wrote 41 words; some wrote hundreds of words. Respondents who gave longer responses tend to be highly educated and are more likely to be women. The patterns highlighted in this report hold up even when controlling (in multiple regression models) for the length of the responses as well as the demographic characteristics of respondents.

Researchers used natural language processing methods and human validation to identify topics in the open-ended responses. Put more simply, algorithms were used to analyze the responses for specific terms, and researchers verified the results to ensure accuracy. The goal was to classify whether each response mentions a given topic. Using a computational model of words that regularly appear together in the answers, researchers identified 30 different topics and used sets of keywords to measure each topic and label the responses. For example, answers that used words like “reading” and “exercise” were classified as mentioning “activities and hobbies.” Responses could be coded as mentioning multiple topics or none at all. For instance, responses that mentioned “reading the Bible” were coded as mentioning both reading (an activity or hobby) and Christianity, faith and spirituality.
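
The keyword-labeling step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Center's actual code: the topic names and keyword sets below are hypothetical stand-ins (the real keyword lists are documented in the Methodology), and the sketch omits both the topic-modeling step used to identify the 30 topics and the human validation of the results. It shows the two properties the text emphasizes: a single response can match several topics, or none at all.

```python
import re

# Hypothetical keyword sets for illustration only; the keyword lists actually
# used in the analysis are described in the Methodology, not reproduced here.
TOPIC_KEYWORDS: dict[str, set[str]] = {
    "activities and hobbies": {"reading", "exercise", "gardening", "hiking"},
    "faith and spirituality": {"god", "faith", "bible", "jesus", "religion"},
    "family": {"family", "mom", "sibling", "niece", "children"},
}


def code_response(text: str) -> set[str]:
    """Return every topic whose keywords appear in a response (multi-label)."""
    # Lowercase and split into word tokens so "Reading" matches "reading".
    words = set(re.findall(r"[a-z']+", text.lower()))
    # A topic is coded if any of its keywords occurs in the response;
    # an empty set means the response mentions none of the topics.
    return {topic for topic, keywords in TOPIC_KEYWORDS.items() if words & keywords}


print(sorted(code_response("Reading the Bible every morning")))
print(code_response("Warm weather"))
```

The first call codes the response as mentioning both "activities and hobbies" and "faith and spirituality", mirroring the "reading the Bible" example, while the second returns an empty set. Exact-word matching of this kind would miss variants such as "read" or "reads", so a working scheme would need broader keyword lists or stemming.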

The open-ended responses include both broad topics and more specific subtopics within them. For example, respondents who specifically mention their husband, wife or romantic partner are coded as having cited a spouse or partner (20%). Those who respond with specific mention of their children or grandchildren (34%) are coded as such. And all respondents who mention any of these are also included in a broader category of those who mention family (69%), as are those who use words like “mom,” “sibling,” “niece” or simply “family.”

Similarly, responses that contain words like “Jesus” or “Christian” are included in the Christianity category (5%). The category of those who mention faith and spirituality (20% of all respondents) includes the 5% who mention Christianity as well as those who mention other religions or offer more general references with words like “God,” “religion,” “creator,” or simply “faith” or “spirituality.”
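
The nesting of subtopics within broader categories amounts to a simple roll-up rule: a response coded with a subtopic is also counted under its parent category, and each response is counted at most once per category. The Python sketch below illustrates that rule. The mapping and function names are hypothetical, and the four toy responses are invented for illustration; they are not survey data.

```python
# Hypothetical mapping from specific subtopics to the broader category that
# contains them, following the examples given in the text.
PARENT: dict[str, str] = {
    "spouse or partner": "family",
    "children or grandchildren": "family",
    "christianity": "faith and spirituality",
}


def roll_up(topics: set[str]) -> set[str]:
    """Add the broader parent category for every coded subtopic."""
    return topics | {PARENT[t] for t in topics if t in PARENT}


def share(responses: list[set[str]], topic: str) -> float:
    """Percentage of responses coded as mentioning `topic`."""
    if not responses:
        raise ValueError("need at least one response")
    # Each response counts once, however many of its subtopics roll up to `topic`.
    return 100 * sum(topic in r for r in responses) / len(responses)


# Four invented responses, already coded at the subtopic level.
responses = [
    roll_up({"christianity"}),
    roll_up({"spouse or partner", "children or grandchildren"}),
    roll_up({"faith and spirituality"}),  # e.g., a general mention of "God"
    roll_up(set()),
]
print(share(responses, "christianity"))            # 25.0
print(share(responses, "faith and spirituality"))  # 50.0
print(share(responses, "family"))                  # 25.0
```

Because every Christianity mention is also a faith-and-spirituality mention, the broader share can never be smaller than the subtopic share. This is consistent with 20% of respondents mentioning faith and spirituality overall while 5% mention Christianity specifically.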

Full details about how the open-ended responses were coded are provided in the Methodology.

In many cases, the results of the open-ended and closed-ended questions resemble one another. For instance, 69% of respondents mention something having to do with family in their open-ended response to the question of what gives their life meaning and satisfaction, and an identical share (69%) say in the closed-ended question that they derive “a great deal” of meaning and fulfillment from family. Similarly, career is mentioned as a source of meaning and fulfillment by one-third of respondents in both the open-ended and the closed-ended questions.

In other cases, however, the two approaches to asking the question about what makes life meaningful yield very different results – at least at first glance. For example, in the open-ended question, just 5% of respondents mention something about pets or animals when describing what makes their lives meaningful. But in the closed-ended question, fully 45% of Americans say “caring for pets” provides them with “a great deal” of meaning and fulfillment.

These divergent results underscore the very different nature of the two kinds of questions. The results of the open-ended question suggest that when asked to describe, in their own words, what provides them with meaning, fulfillment and satisfaction in life, relatively few people think immediately of pets or caring for animals. Other things – including family, friends, career and religious faith – may come to mind much more quickly for most people.

However, when prompted explicitly in the closed-ended question to think about their pets, nearly half of Americans acknowledge that caring for their animals does, indeed, provide them with a great deal of meaning and fulfillment, and an additional three-in-ten say they get “some” meaning and fulfillment from their relationships with animals.

The surveys find similar patterns with respect to being outdoors and experiencing nature, fitness activities, and creative hobbies (such as arts and crafts or making music). These are all cited as providing a great deal of meaning by much larger shares of respondents when they are reminded about them in the closed-ended question than when they are asked to express, in their own words, what makes their lives meaningful and fulfilling.

Interpreting open-ended responses

Different groups of Americans find meaning in different places

Different groups of Americans mention different topics when asked what gives them meaning in life. Those with high income levels are more likely to mention friends and being in good health. Evangelical Protestants are more likely than Christians in general to say that they find a great deal of meaning in religion. Those who identify as politically liberal mention creative activities more than Americans overall, while conservatives are more likely to bring up faith, even after controlling for differences in their religious identification. For the most part, these and other patterns are observed in both the open-ended and closed-ended questions (where direct comparisons are possible).

Americans with higher household income, more education are most likely to mention friendships, good health, stability

There are several sources of meaning that are mentioned much more often by Americans with high incomes and levels of educational attainment than by those with lower incomes and less education. For instance, the open-ended question finds that higher levels of education and income are associated with an increased likelihood that a respondent will cite friendships and good health. Furthermore, high levels of education also are associated with mentioning a sense of security or stability, as well as recreational activities, as key sources of meaning and fulfillment.

Conversely, there are few topics that those with lower levels of income and education mention more often than others. Taken together, these findings suggest that those respondents who are socioeconomically advantaged may have resources – like free time to spend with friends or money to pursue opportunities to travel – that those who are less socioeconomically privileged simply do not have.

For example, those with a college degree are more likely than those with a high school diploma or less education to mention their friends (29% vs. 12%). There is a similar gap across income groups, even after accounting for education: A quarter of those who earn more than $75,000 a year mention friends, compared with 14% of those who have household incomes of less than $30,000 a year. One respondent in the highest income and education groups said: “Enjoying being with friends and family. What keeps me going is gourmet cooking for my friends and family.”

Education and income also are associated with whether respondents mention the health of themselves or loved ones. About a quarter of college graduates (24%) and those with household incomes of $75,000 or more (23%) mention being in good health when describing what gives them a sense of meaning. For instance, one respondent at the highest income and education levels said, “I am strong and fit, and while I am facing the physical changes that you face as you age, I am doing what can be done to defer them.” In contrast, those with incomes under $30,000 and those with no college experience are less likely to mention the topic (10% and 11%, respectively).

In addition to being less likely to mention being in good health, Americans whose household incomes are below $30,000 are somewhat more likely than those who earn $75,000 or more to mention health difficulties (coded as a different topic) as part of their response to the open-ended question about what makes their life meaningful. As one lower-income respondent put it, “It’s been more than difficult. Every year I’ve needed surgery on something. I’m still striving to do my best, to live my most productive life.”

Travel is also mentioned more frequently by those with high household incomes, as well as those with college degrees – 11% in both groups bring up traveling, exploring new places, or going on vacation, compared with 3% of those with no college experience or incomes under $30,000. As one low-income college-educated respondent put it, “I travel to see friends and family. I live in a beautiful country where I can see, hear and appreciate God’s creation.” Meanwhile, one high-income person with no college experience echoed similar sentiments: “Traveling and seeing things I have never seen, enjoying simple things like the outdoors and warm weather.”

Those with higher incomes or college experience are also far more likely to mention their job or career when describing what gives them a sense of meaning. Nearly half – 48% – of both high-income and college-educated Americans mention their job. By contrast, just 24% of those without college experience and 22% of those making less than $30,000 cite their job or career when discussing how they find meaning and satisfaction in life.²

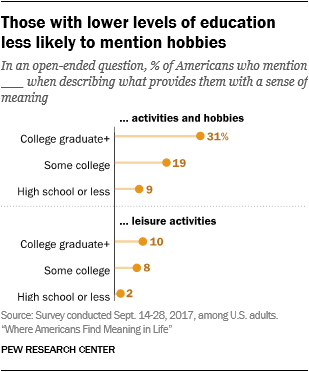

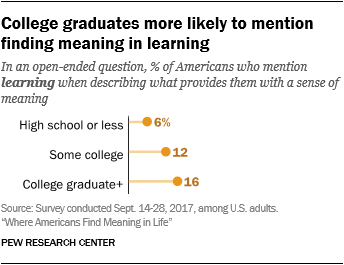

Levels of education – but not income – have a strong relationship with finding meaning in personal activities and hobbies, as well as other broad topics like feeling a sense of security and stability, and learning or education itself. College graduates are more than three times as likely as Americans with no college experience to mention activities of any kind (31%, compared with just 9%). In particular, they more frequently cite leisure activities (10%, compared with 2% among those with a high school degree or less). Those with college or graduate degrees also are more likely to mention learning or education when describing what gives them a sense of meaning (16% vs. 6% of Americans with a high school degree or less). For example, one respondent with a college degree said, “I’m a gardener and use cover crops to increase soil tilth and fertility. This requires that I continue to explore and learn about the soil ecosystem.”

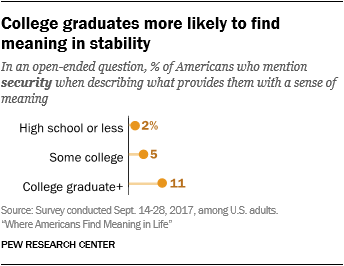

College-educated Americans also are more likely to mention having a sense of security or stability. One-in-ten of those with college degrees (11%) say they derive meaning from a sense of security, compared with just 2% of those without any college experience – regardless of their age or income. One respondent who has a postgraduate degree said, “Being safe and secure. Knowing that my family will be taken care of and having their needs met. My children having a future as bright as mine.”

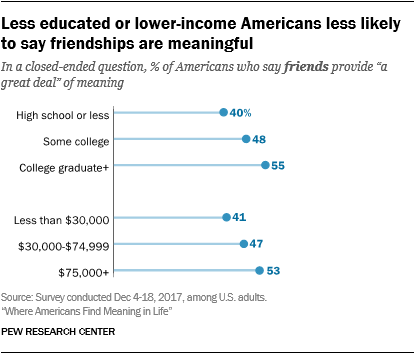

Like the open-ended question, the closed-ended questions find that those with more socioeconomic resources may have more opportunities for social activities than those who have fewer resources. For example, 55% of college graduates say spending time with friends provides them with “a great deal” of meaning and fulfillment, compared with 40% of those with a high school degree or less. Similarly, more than half (53%) of those with household incomes above $75,000 a year say that friends provide them with “a great deal” of meaning, while only 41% of those with incomes under $30,000 say the same.

However, while education and income are associated with whether respondents mention their job or career in the open-ended question, these factors have no substantial effect on whether Americans say in the closed-ended question that they draw “a great deal” of meaning from their job or career. This suggests that those with higher incomes or more education do not necessarily find more meaning in their jobs, but that they are notably more likely to think of and bring up their careers when asked to describe in their own words what gives them a sense of meaning in their lives.

Where different racial groups find meaning

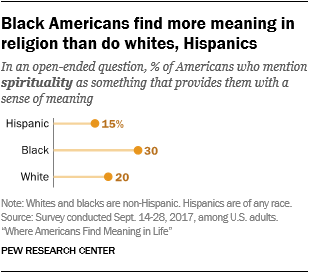

Regardless of their particular religious denomination, black Americans are more likely than others to mention faith and spirituality when describing (in the open-ended question) what gives them a sense of meaning.³ Fully three-in-ten black Americans (30%) mention spirituality and faith, compared with 20% of whites and 15% of Hispanics.

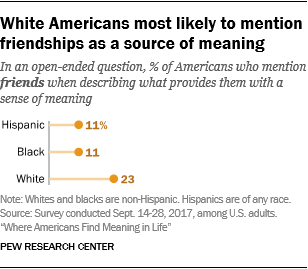

Race and ethnicity also are linked with a number of other sources of meaning, independent of socioeconomic factors. Specifically, white Americans are much more likely than black and Hispanic Americans to mention friends, stability and security, and a positive home environment as sources of meaning in their lives, even when controlling for education and income.

While 23% of white Americans mention friends when describing what gives their lives meaning, fewer black and Hispanic Americans do so (11% in each group). Furthermore, black and Hispanic Americans are much less likely than whites to mention enjoying where they live; 5% and 7% do so, compared with 16% of white Americans. And while 13% of blacks and 14% of Hispanics mention finances and money in some way, more white Americans (26%) mention the topic when describing what makes their lives meaningful.⁴

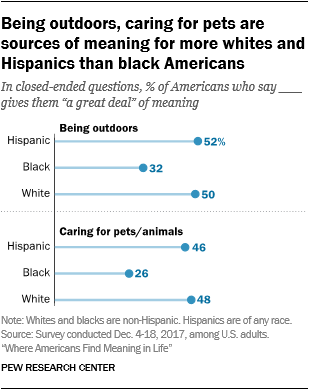

Analysis of the open-ended responses also shows that black Americans are less likely than others to mention being in good health (8%, compared with 15% of Hispanics and 18% of whites), and black Americans are notably less likely than whites to mention pets or animals or enjoying the outdoors and nature. While these topics were not brought up frequently in the open-ended responses by any group, hundreds of white respondents mentioned pets or animals and nature or the outdoors. In contrast, fewer than 10 black respondents mentioned either topic.

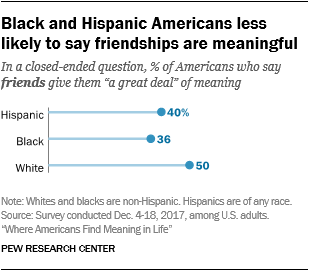

Similar patterns are found in the closed-ended questions, in which fully half of black Americans (52%) say they derive “a great deal” of meaning from their religion, compared with 37% of Hispanic Americans and a third of white Americans. Furthermore, a third of black Americans (32%) indicate that religion is their single most important source of meaning in life, compared with 18% of whites and 16% of Hispanics.

The closed-ended survey also finds that both black and Hispanic Americans are less likely than whites to say that spending time with friends provides them with “a great deal” of meaning. And whereas about half of Hispanics and whites say they get “a great deal” of meaning from pets or spending time in nature, just a quarter of black respondents say they get “a great deal” of meaning from pets (26%), and one-third say the same about nature (32%).

Among evangelicals, religion ranks as the most important source of meaning

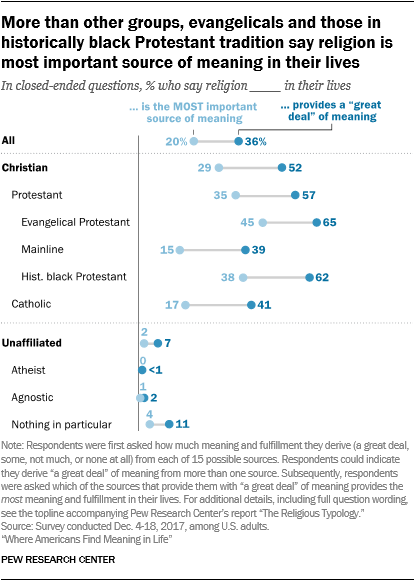

Religion is not the most commonly referenced source of meaning and fulfillment in either survey. In the open-ended question, one-in-five adults mention spirituality and religious faith when describing the things they find meaningful and fulfilling, which is on par with the share who mention friends and various activities or hobbies. And in the closed-ended question, 36% of those surveyed say they get “a great deal” of meaning and fulfillment from their religious faith, which is roughly equivalent to the share who draw the same level of meaning from reading or from their career.

But while religion is not a universal source from which Americans say they obtain “a great deal” of meaning, it is a highly salient source of fulfillment among those who select it. Indeed, among those who say (in the closed-ended survey) that religion provides them with “a great deal” of meaning, 55% say that religion is their most important source of meaning, while fewer (30%) say family provides them with the most meaning and fulfillment.

For members of some religious traditions as well – particularly evangelical Protestants and members of the historically black Protestant tradition – faith matches or exceeds anything else as the top source of meaning and fulfillment. About two-thirds (65%) of evangelical Protestants say they find “a great deal” of meaning in their religious faith, including 45% who say religion is the most important source of meaning in their lives – higher than the share who say this about family (31%). A similar share of those in the historically black Protestant tradition (62%) also report that their religious faith provides them with “a great deal” of meaning, including 38% who say religion is the most important source of meaning in their lives.

By comparison, roughly four-in-ten Catholics (41%) and mainline Protestants (39%) say their religious faith provides them with “a great deal” of meaning and fulfillment. Also, in contrast with evangelical Protestants and members of the historically black Protestant tradition, fewer Catholics and mainline Protestants say religion is the most important source of meaning in their lives (17% and 15%, respectively). Instead, they are among the most likely of all religious groups to say that family provides them with the most meaning (50% and 54%, respectively).

In the open-ended question, too, evangelical Protestants are more likely than other Americans to mention faith and spirituality (and Christianity, specifically) when asked what makes life meaningful. Overall, 43% of evangelicals mention spirituality or faith in some way, including 14% who make specific references to their Christian faith. For example, one evangelical respondent said, “My belief in Jesus Christ and his teachings helps me greatly when things get really difficult.”

Spirituality is also a commonly mentioned topic among those in the historically black Protestant tradition, among whom 32% mention spirituality or faith as a source of meaning in their lives. Smaller shares of mainline Protestants (18%) and Catholics (16%) mention faith and spirituality as sources of meaning and fulfillment.

Not surprisingly, very few self-described atheists mention spiritual topics when asked what makes life meaningful. Instead, atheists are much more likely than others to mention finances or involvement in various kinds of activities. Indeed, more than a third of atheists (37%) discuss finances in response to the open-ended question, compared with 26% among evangelical Protestants, 22% among Catholics, 20% among mainline Protestants and 15% among those in the historically black Protestant tradition. One atheist described finding meaning in “doing work and acting in a way that is meaningful to your beliefs. Being financially comfortable to be able to do things that make you happy.”

Atheists are especially likely to mention hobbies and activities in general – roughly a third (32%) of atheists do so. In particular, they are more likely than Americans overall to mention creative activities like painting and writing, leisure activities like playing games and watching movies, and traveling or exploring new places as sources of meaning and fulfillment in their lives. For example, one said, “I also find a lot of pleasure in trying new things, athletics, and exploration. I have the money and the flexible schedule to go on adventures and challenge myself physically.”

Conservative Americans more likely to find meaning in religion, while liberal Americans more likely to find meaning in creativity and social causes

Americans who identify as conservative or very conservative are more likely than others to say they find “a great deal” of meaning in their religious faith, while those who are liberal or very liberal are more likely than conservatives to say they find a great deal of meaning in arts and crafts and social or political causes.

Regardless of their religious affiliation, conservative or very conservative Americans are more likely than liberals to mention (in their open-ended responses) their spirituality or faith as a key source of meaning in their lives (33% vs. 9%). As one conservative respondent said, “First, my life is rooted in God and the knowledge that He provides unconditional love and support regardless of what is happening in the world. With God at the center of my life, circumstances may change but love, peace, and joy remain.”

The closed-ended question echoes this pattern. Conservative U.S. adults are at least twice as likely as liberals to say religion provides them with “a great deal” of meaning (53% vs. 23%). This is especially true of very conservative Americans: Six-in-ten (62%) say their religious faith provides them with “a great deal” of meaning, including 41% who say that it is their most important source of meaning. In contrast, just 16% of very liberal Americans say that they find “a great deal” of meaning in religion, including just 5% who say that it provides them with the most meaning in life. And fully half of very liberal Americans report that religion does not contribute at all to their sense of meaning.

Liberals, meanwhile, are more likely than conservatives to say they derive meaning and fulfillment from listening to music and arts and crafts. Fully half of liberals say they get “a great deal” of meaning from listening to music (52%), and three-in-ten (31%) say they get “a great deal” of meaning from arts and crafts. By comparison, 34% of conservatives derive “a great deal” of meaning from listening to music, and 18% say the same about arts and crafts. These results come from the closed-ended question, but as one self-identified liberal put it in responding to the open-ended question, “Art and love keep me going. I am in a relationship with the love of my life again, and he encourages me to follow my heart and pursue whatever art it is I want to follow at the time. I paint, draw, do photography, make jewelry, do paper crafts, small woodworking and wood burning, sew costumes and other clothing, blend spices, dry flowers, and sometimes do projects that might be called ‘fusion’ art, such as painting and adding pieces of paper crafts.”

Liberal Americans are also more likely than conservatives to say that social or political causes provide them with “a great deal” of meaning (19% vs. 10%). And among those identifying as “very liberal,” three-in-ten (30%) say they find a great deal of meaning in social or political causes, almost three times the rate seen in the general public. In the open-ended question, one very liberal respondent said they find meaning in “volunteering for causes I believe in like ending hunger and social justice issues,” among other activities. Liberals are also notably more likely to say that they find “a great deal” of meaning in reading: Four-in-ten say they do (43%), compared with 37% of Americans overall.

Younger Americans less likely to mention religion, but draw more meaning from learning than older Americans

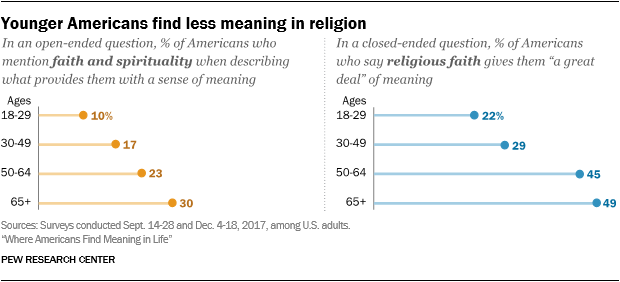

Just 10% of U.S. adults under age 30 mention spirituality, faith or God when describing (in the open-ended question) what affects their sense of meaning. By contrast, three-in-ten adults ages 65 and older mention religion when describing what makes their life meaningful and fulfilling.

The closed-ended question finds a similar relationship between age and finding meaning in religion: Roughly one-in-five adults under 30 (22%) report that religion provides them with “a great deal” of meaning, while fully a third (34%) say religion provides them with no meaning at all. By contrast, half of those ages 65 or older report that they draw “a great deal” of meaning from religion, and only 12% say they do not get any meaning and fulfillment from religion. Three-in-ten (29%) of those ages 65 or older say religion is the top source of meaning in their lives, while 10% of adults under 30 say the same.

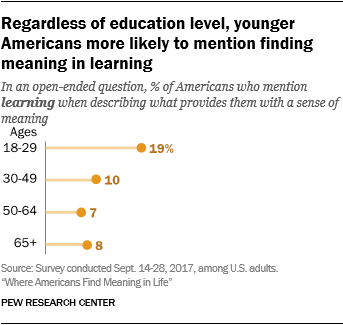

Meanwhile, U.S. adults under age 30 are more likely than older Americans to say (in the open-ended question) that they find meaning in learning or education (19% do so, compared with 9% among older Americans). This difference between younger and older adults is statistically significant even accounting for different levels of educational attainment between the groups, although it may be connected to the fact that a much higher share of young adults are current or recent full-time students.

Married Americans more likely to mention family, religion

While family is a key source of meaning for Americans in many different demographic categories, there are some variations between subgroups. For example, women are somewhat more likely than men to say family provides a great deal of meaning in their lives.

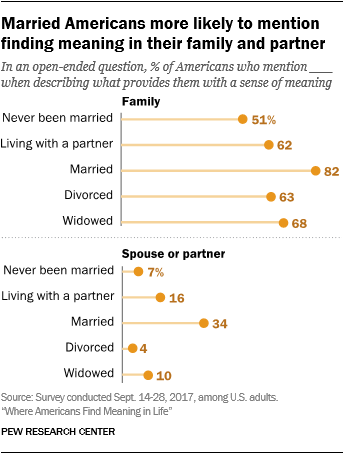

When asked in the open-ended question about what gives them a sense of meaning and satisfaction in life, married Americans are more likely than unmarried people to mention family (which includes specific references to one’s spouse or children). Four-in-five married people (82%) mention family as a source of meaning and fulfillment (including 34% who specifically mention their spouse or partner), compared with 59% of unmarried U.S. adults. Married Americans are also more likely to mention spirituality or faith; 23% do, compared with 13% of Americans who have never been married.

Similar patterns emerge in the closed-ended question. Married Americans are more likely than unmarried people to say that family provides them with “a great deal” of meaning (80% vs. 60%). And half (49%) say that it is their most important source of meaning, compared with 32% of unmarried Americans. Married people also are more likely than unmarried people to indicate that they find “a great deal” of meaning in religion (41% vs. 32%), and a quarter (24%) say religion is the most important source of meaning in their lives, compared with 16% among unmarried Americans. One married American explained that they find meaning in “association with family and friends. … The relationship I share with my wife. But most of all, my relationship with my Lord and Savior Jesus Christ.”

Acknowledgments

This report is a collaborative effort based on the input and analysis of the following individuals. Find related reports online at pewresearch.org/religion.

Primary Researchers

Patrick van Kessel, Senior Data Scientist

Adam Hughes, Computational Social Scientist

Gregory A. Smith, Associate Director of Research

Becka A. Alper, Research Associate

Research Team

Alan Cooperman, Director of Religion Research

Besheer Mohamed, Senior Researcher

Kiana Cox, Research Associate

Onyi Lam, Computational Social Scientist

Elizabeth Podrebarac Sciupac, Research Associate

Stefan Wojcik, Computational Social Scientist

Claire Gecewicz, Research Analyst

Dennis R. Quinn, Data Science Analyst

Emma Remy, Data Science Assistant

Research Methods

Courtney Kennedy, Director of Survey Research

Nick Bertoni, Panel Manager

Andrew Mercer, Senior Research Methodologist

Nicholas Hatley, Research Analyst

Arnold Lau, Research Analyst

Editorial and Graphic Design

Michael Lipka, Editorial Manager

Aleksandra Sandstrom, Copy Editor

Bill Webster, Information Graphics Designer

Communications and Web Publishing

Stacy Rosenberg, Associate Director, Digital

Travis Mitchell, Digital Producer

Anna Schiller, Communications Manager

Rachel Weisel, Communications Manager

Hannah Klein, Communications Associate

Claudia Deane, vice president of research at Pew Research Center, and Rich Morin, senior editor, also provided valuable guidance for this report.

Methodology

This report is based on two separate surveys conducted on Pew Research Center’s American Trends Panel. The ATP is a nationally representative panel of randomly selected U.S. adults recruited from landline and cellphone random-digit-dial surveys. Panelists participate via monthly self-administered web surveys. Panelists who do not have internet access are provided with a tablet and wireless internet connection.

The first of the two surveys analyzed in this report, which included an open-ended question about what makes life meaningful and fulfilling, was conducted in September 2017. Details about the survey and the methods used to code the open-ended responses are provided below.

The second survey, which included closed-ended questions about what makes life meaningful and fulfilling, was conducted in December 2017. The overall results of the December 2017 survey have been previously released (however, the analysis reported here is new), and methodological details, including question wording, are available in Pew Research Center’s report “The Religious Typology.”

Survey data collection – September 2017 survey

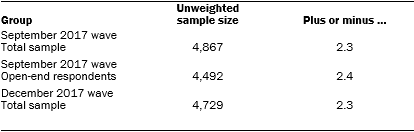

The open-ended question discussed in this report was included in an ATP survey conducted Sept. 14 to 28, 2017, among 4,867 respondents, including 4,492 respondents who provided a response to the open-ended question. The analyses reported here are based on the subset of participants who completed the open-ended question; the overall margin of sampling error for these 4,492 respondents is plus or minus 2.4 percentage points.5

Members of the American Trends Panel were recruited from several large, national landline and cellphone random-digit-dial surveys conducted in English and Spanish. At the end of each survey, respondents were invited to join the panel. The first group of panelists was recruited from the 2014 Political Polarization and Typology Survey, conducted Jan. 23 to March 16, 2014. Of the 10,013 adults interviewed, 9,809 were invited to take part in the panel and a total of 5,338 agreed to participate.6 The second group of panelists was recruited from the 2015 Pew Research Center Survey on Government, conducted Aug. 27 to Oct. 4, 2015. Of the 6,004 adults interviewed, all were invited to join the panel, and 2,976 agreed to participate.7 The third group of panelists was recruited from a survey conducted April 25 to June 4, 2017. Of the 5,012 adults interviewed in the survey or pretest, 3,905 were invited to take part in the panel, and a total of 1,628 agreed to participate.8

The ATP data were weighted in a multistep process that begins with a base weight, which incorporates the respondents’ original survey selection probability and the fact that in 2014 some respondents were subsampled for invitation to the panel. Next, an adjustment was made for the fact that the propensity to join the panel and remain an active panelist varied across different groups in the sample. The final step in the weighting uses an iterative technique that aligns the sample to population benchmarks on a number of dimensions. Gender, age, education, race, Hispanic origin and region parameters come from the U.S. Census Bureau’s 2016 American Community Survey. The county-level population density parameter (deciles) comes from the 2010 U.S. decennial census. The telephone service benchmark comes from the July-December 2016 National Health Interview Survey and is projected to 2017. The volunteerism benchmark comes from the 2015 Current Population Survey Volunteer Supplement. The party affiliation benchmark is the average of the three most recent Pew Research Center general public telephone surveys. The internet access benchmark comes from the 2017 ATP Panel Refresh Survey. Respondents who did not previously have internet access are treated as not having internet access for weighting purposes. Sampling errors and statistical tests of significance take into account the effect of weighting. Interviews are conducted in both English and Spanish, but the Hispanic sample in the American Trends Panel is predominantly U.S. born and English speaking.
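The text describes the final weighting step only as an iterative technique that aligns the sample to population benchmarks. Raking (iterative proportional fitting) is a common technique of this kind, and the minimal Python sketch below assumes it: weights are rescaled one dimension at a time until every weighted margin matches its benchmark share. The base-weight and panel-propensity steps are omitted, and the sample and benchmark shares are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def rake(base_weights: List[float], respondents: List[Dict[str, str]],
         targets: Dict[str, Dict[str, float]], max_iter: int = 100,
         tol: float = 1e-10) -> List[float]:
    """Raking (iterative proportional fitting) sketch.

    respondents: one dict per respondent, mapping dimension -> category.
    targets: dimension -> {category: population share}; shares for each
    dimension must sum to 1, and every category must occur in the sample.
    """
    weights = list(base_weights)
    for _ in range(max_iter):
        largest_adjustment = 0.0
        for dim, shares in targets.items():
            total = sum(weights)
            # Current weighted total in each category of this dimension.
            current = defaultdict(float)
            for w, r in zip(weights, respondents):
                current[r[dim]] += w
            # Factor that moves each category to its benchmark share.
            factors = {cat: shares[cat] * total / current[cat] for cat in shares}
            for i, r in enumerate(respondents):
                f = factors[r[dim]]
                largest_adjustment = max(largest_adjustment, abs(f - 1.0))
                weights[i] *= f
        # Stop once a full pass over all dimensions barely changes the weights.
        if largest_adjustment < tol:
            break
    return weights


# Hypothetical sample and benchmarks, for illustration only.
sample = [
    {"gender": "M", "age": "18-49"}, {"gender": "M", "age": "18-49"},
    {"gender": "M", "age": "50+"}, {"gender": "F", "age": "18-49"},
    {"gender": "F", "age": "50+"}, {"gender": "F", "age": "50+"},
]
benchmarks = {"gender": {"M": 0.5, "F": 0.5}, "age": {"18-49": 0.3, "50+": 0.7}}
weights = rake([1.0] * len(sample), sample, benchmarks)
```

Each pass preserves the total weight, so only the distribution across categories changes. Production weighting often also trims extreme weights, which this sketch does not attempt.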

The September 2017 wave had a response rate of 73% (4,867 responses among 6,696 individuals in the panel). Taking account of the combined, weighted response rate for the recruitment surveys (10.0%) and attrition of panel members who were removed at their request or for inactivity, the cumulative response rate for the wave is 2.5%.9

Identifying themes within open-ended responses

In the first survey, researchers asked the following question:

We’re interested in exploring what it means to live a satisfying life. Please take a moment to reflect on your life and what makes it feel worthwhile – then answer the question below as thoughtfully as you can.

What about your life do you currently find meaningful, fulfilling, or satisfying? What keeps you going, and why?

To avoid the risk of priming bias, the question was placed near the beginning of the survey, immediately following three introductory “ladder of life” questions that asked respondents to rate their lives on a scale of 1-10 in the past, present and future. Respondents wrote 41 words on average in answering the question about what makes life meaningful, fulfilling or satisfying. The shortest responses consisted of just a single word (66 responses in total; “family,” “work,” “God,” etc.) and the longest response totaled 939 words. Half of the panelists also received a randomly assigned expanded prompt that explicitly asked for detail (“Please give as much detail as you can”); those respondents wrote an additional 12 words on average.

Each response was coded as to whether it contained mentions of each of 30 topics or themes.10 Decisions about which themes to code for and which keywords to use as indicators were informed by a topic model. Responses were coded as having mentioned a topic if the response included any of several keywords determined by researchers to be indicators of the topic in question, or, in one case, if the model predicted that a response mentioned the topic. For instance, responses were coded as having mentioned travel if they included any of the following words or phrases: “travel,” “traveling,” “vacation,” “trip,” “adventure,” “visiting,” “new place,” “explore world” and “overseas.” Responses that did not include any of those words were coded as not having mentioned travel. (A complete list of the 30 themes coded in this analysis is provided in the appendix, along with full details about the keywords used as indicators for each topic.)
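The travel rule above amounts to a single regular expression. In this minimal sketch the keyword list is copied from the text, while the word-boundary anchors (`\b`) and the choice to match against cleaned, lowercased text are illustrative assumptions rather than documented details.

```python
import re

# Travel keywords as listed in the methodology text.
TRAVEL_KEYWORDS = [
    "travel", "traveling", "vacation", "trip", "adventure",
    "visiting", "new place", "explore world", "overseas",
]

# One alternation over all keywords; the \b boundaries (an assumption) keep
# "trip" from matching inside longer words such as "tripod".
TRAVEL_RE = re.compile(
    r"\b(?:" + "|".join(re.escape(k) for k in TRAVEL_KEYWORDS) + r")\b"
)


def mentions_travel(cleaned_response: str) -> bool:
    """Return True if any travel keyword appears in the cleaned response."""
    return TRAVEL_RE.search(cleaned_response.lower()) is not None


print(mentions_travel("love travel grandchild"))  # True
print(mentions_travel("tripod photography"))      # False
```

Multiword keywords such as “explore world” only make sense against text that has already had stop words removed, which is why the sketch expects cleaned responses.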

The remainder of this section provides additional details about how the text responses were processed and how the 30 topics coded in the analysis were decided upon.

Text processing

Prior to analysis, responses were cleaned using a series of standard text-processing steps: responses were broken down into whitespace-separated tokens, lowercased and lemmatized (reduced to their root form), and punctuation was removed. A total of 434 stop words were also removed: 316 common English filler words that hold little meaning (such as “the,” “a,” “and,” “but”), 24 words and abbreviations related to months (“June,” “July”), and 94 words from a custom list that was developed iteratively, consisting mainly of words contained in the survey prompt (which respondents sometimes reiterated; “satisfying,” “meaningful,” “fulfilling”) and other words that were uniquely common in the survey responses but not useful for analysis (“personally,” “especially,” “ultimately”). The following example shows how these steps affect the text:

Before: “I finally have a child. I have a wonderful husband and a job that allows me to have flexibility.”

After: “finally child wonderful husband job allows flexibility”
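The cleaning steps and example above can be sketched in a few lines of Python. The stop-word set and the lemma lookup are tiny hypothetical stand-ins for the report's 434-word list and a real lemmatizer, chosen so that the sketch reproduces the before-and-after example; the report does not say which software it used.

```python
import re

# Hypothetical stand-ins: a handful of stop words and a toy lemma table.
STOP_WORDS = {"i", "me", "a", "the", "and", "but", "have", "that", "to"}
LEMMAS = {"children": "child", "husbands": "husband", "jobs": "job"}


def clean(response: str) -> str:
    cleaned = []
    for token in response.split():                   # whitespace tokens
        token = re.sub(r"[^\w]", "", token.lower())  # lowercase, drop punctuation
        token = LEMMAS.get(token, token)             # toy lemmatization
        if token and token not in STOP_WORDS:        # drop empties and stop words
            cleaned.append(token)
    return " ".join(cleaned)


before = ("I finally have a child. I have a wonderful husband "
          "and a job that allows me to have flexibility.")
print(clean(before))  # finally child wonderful husband job allows flexibility
```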

Responses were then broken down into “bags of words,” a common approach in natural language processing that involves converting the cleaned text of responses into sets of words and phrases. Researchers chose to represent responses in terms of single words, two-word phrases (bigrams), and three-word phrases (trigrams). For example:

Before: “finally child wonderful husband job allows flexibility”

After: “finally,” “child,” “wonderful,” “husband,” “job,” “allows,” “flexibility,” “finally child,” “child wonderful,” “wonderful husband,” “husband job,” “job allows,” “allows flexibility,” “finally child wonderful,” “child wonderful husband,” “wonderful husband job,” “husband job allows,” “job allows flexibility”
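Generating the unigram, bigram and trigram features shown above takes only a sliding window over the tokens. This Python sketch reproduces the 18 features in the example (7 unigrams, 6 bigrams and 5 trigrams), in the same order; the function name is hypothetical.

```python
from typing import List


def ngrams(cleaned: str, max_n: int = 3) -> List[str]:
    """All contiguous word sequences of length 1..max_n, shortest first."""
    tokens = cleaned.split()
    features = []
    for n in range(1, max_n + 1):
        # Slide a window of n tokens across the response.
        for start in range(len(tokens) - n + 1):
            features.append(" ".join(tokens[start:start + n]))
    return features


features = ngrams("finally child wonderful husband job allows flexibility")
print(len(features))  # 18
```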

In addition to removing stop words, researchers also filtered out words and phrases that were found in fewer than five different responses and removed those that were found in more than 90% of all responses (extremely frequent words often do not capture meaningful variation).

At the end of this process, the remaining vocabulary consisted of 1,603 unigrams, 1,082 bigrams and 111 trigrams, and each document was flagged as containing each word or phrase, or not, using binary variables. These 2,796 flag variables were then passed into topic models, which attempted to identify clusters of words.
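The frequency filter and the binary flags can be sketched as follows, assuming each response has already been converted into its list of n-grams. The thresholds mirror the text (found in at least five responses, and in no more than 90% of responses); the toy data are invented. Libraries offer comparable options, for example scikit-learn's `CountVectorizer` with `ngram_range=(1, 3)`, `min_df=5`, `max_df=0.9` and `binary=True`, though its default tokenizer differs from the cleaning described above.

```python
from collections import Counter
from typing import List


def build_vocabulary(docs: List[List[str]], min_docs: int = 5,
                     max_share: float = 0.9) -> List[str]:
    """Keep n-grams found in at least min_docs responses and in no more
    than max_share of all responses."""
    doc_freq = Counter()
    for features in docs:
        doc_freq.update(set(features))  # count each n-gram once per response
    n_docs = len(docs)
    return sorted(term for term, count in doc_freq.items()
                  if count >= min_docs and count / n_docs <= max_share)


def binary_flags(docs: List[List[str]], vocabulary: List[str]) -> List[List[int]]:
    """One row per response, one 0/1 column per vocabulary term."""
    rows = []
    for features in docs:
        present = set(features)
        rows.append([1 if term in present else 0 for term in vocabulary])
    return rows


# Toy data: "family" appears everywhere (dropped), "dog" is too rare (dropped).
docs = [["family", "work"]] * 6 + [["family", "dog"]] * 4
vocab = build_vocabulary(docs)
print(vocab)  # ['work']
```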

Developing codable topics and identifying associated keywords through topic models

After processing the text, researchers entered the responses into a variety of computational topic models.11 Two different semi-supervised topic models – a granular one with 110 topics, and a smaller one with 55 topics – initially returned 165 possible themes, which at this stage were simply sets of words that tended to appear together within responses.12 Researchers reviewed a sample of responses containing each of the sets of words, and then iteratively refined the models by adding anchor words to direct the models toward more conceptually coherent themes, with the goal of being able to assign each theme a clear definition. Researchers also identified spurious words in the topics that actually referred to a different concept than what the topic was intended to measure, and flagged these as irrelevant words to exclude from the topics in subsequent iterations of the model.

To provide a concrete example, one topic that emerged during exploratory topic modeling included the words “grandchild,” “grand,” “child grandchild,” “grand child,” “child” and “Florida.” While these words were correlated with one another in the dataset, researchers determined that this topic’s main theme was grandchildren, and the word “Florida” was related to a different concept (those who mentioned living in Florida were also especially likely to mention grandchildren). Accordingly, subsequent iterations of the topic model were instructed to assign “Florida” to a different topic.

Researchers repeated these processes several times, and ultimately settled on a finite set of potentially codable themes or topics. For each of these potentially codable topics, the research team developed a codebook consisting of a specific definition for what the topic represented, and additional guidelines for which types of responses qualified and which did not. Next, researchers drew a random sample of 100 responses for each of the potentially codable topics. For each sample, researchers used the codebook to classify whether each response mentioned the topic or not, according to its definition.

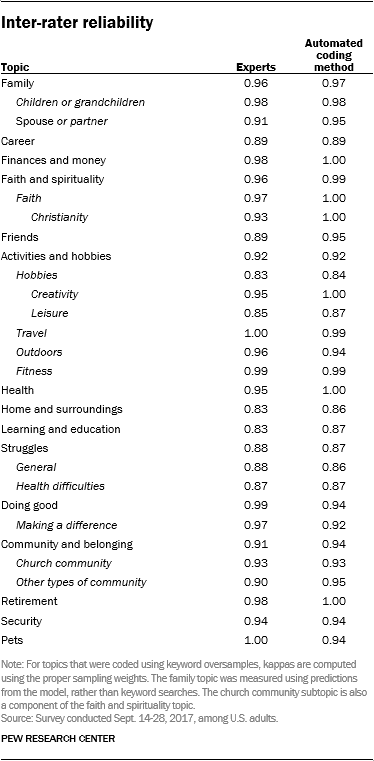

Two in-house coders completed each sample, with the goal of producing acceptable inter-rater reliability, which was defined as achieving 95% confidence that Cohen’s kappa (a common reliability measure) was above 0.70 (a common threshold for good agreement). Whether the codes for a topic’s sample managed to reach this threshold depended on two things: the reliability between the two coders in their coding of the sample of 100 documents, and the prevalence of the topic in the sample (that is, how often it appeared). Because of the small sample sizes and resulting uncertainty, the actual kappa values required to reach the 95% confidence cutoff were much higher than 0.70. Researchers were able to reach this threshold for many of the topics identified as potentially codable, identified some as clearly not reliably codable, and found that several topics showed acceptable reliability but failed to meet the 95% confidence threshold because they were relatively rare and the sample size was not large enough.
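The threshold rule can be illustrated with a short sketch. The report does not state how its confidence bound on kappa was computed; the code below assumes a percentile bootstrap, treating the 5th percentile of resampled kappas as a one-sided 95% lower bound, and the example codes are hypothetical.

```python
import random
from typing import List


def cohens_kappa(a: List[int], b: List[int]) -> float:
    """Cohen's kappa for two coders' binary (0/1) codes on the same responses."""
    n = len(a)
    observed = sum(1 for x, y in zip(a, b) if x == y) / n
    p_a, p_b = sum(a) / n, sum(b) / n
    # Agreement expected by chance from each coder's marginal rates.
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    if expected == 1.0:
        return 1.0  # both coders used one identical label throughout
    return (observed - expected) / (1 - expected)


def kappa_lower_bound(a: List[int], b: List[int], n_boot: int = 2000,
                      alpha: float = 0.05, seed: int = 0) -> float:
    """One-sided (1 - alpha) lower bound via a percentile bootstrap (assumed method)."""
    rng = random.Random(seed)
    n = len(a)
    draws = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample responses
        draws.append(cohens_kappa([a[i] for i in idx], [b[i] for i in idx]))
    draws.sort()
    return draws[int(alpha * n_boot)]


# Hypothetical codes from two coders on ten responses.
coder_1 = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
coder_2 = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
kappa = cohens_kappa(coder_1, coder_2)  # about 0.737
reliable = kappa_lower_bound(coder_1, coder_2) > 0.70
```

With few coded responses, and few of them mentioning a rare topic, the bootstrap distribution is wide, so the point estimate has to sit well above 0.70 before the lower bound clears it.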

For the latter set of topics (those that showed acceptable reliability but failed to meet the 95% confidence threshold), researchers had to code additional documents to meet the confidence threshold. For some of these topics, researchers were able to accomplish this by expanding the existing random samples. However, this was not a viable approach for a few topics that appeared less frequently; for these, researchers used a technique called keyword oversampling to draw new samples. Rather than code another, much larger random sample for topics that were present in only a small fraction of documents, the lists of anchor words were used to draw new samples that were disproportionately composed of documents that contained each of the topics being investigated: 50% with and 50% without. Based on the assumption that roughly half of the documents in these samples would contain the actual themes researchers were attempting to code, the expected Cohen’s kappa (based on the initial random samples for each topic) was used to estimate the number of documents that would be required for each of the new samples in order to successfully verify that reliability was above 0.70.13
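Keyword oversampling can be sketched as stratified sampling on whether a response matches a topic's anchor-word pattern. In this hypothetical sketch each sampled response carries a weight equal to its stratum size divided by the number drawn from that stratum (the inverse of its selection probability), which is one standard way to define sampling weights; the report does not give its exact weighting formula.

```python
import random
import re
from typing import List, Tuple


def keyword_oversample(docs: List[str], pattern: str, n: int,
                       seed: int = 0) -> List[Tuple[str, float]]:
    """Draw about n/2 keyword matches and n/2 non-matches, with inverse-probability weights."""
    rng = random.Random(seed)
    regex = re.compile(pattern)
    matches = [d for d in docs if regex.search(d)]
    non_matches = [d for d in docs if not regex.search(d)]
    sample = []
    for stratum in (matches, non_matches):
        take = min(n // 2, len(stratum))
        if take == 0:
            continue
        weight = len(stratum) / take  # stratum size / number drawn
        sample.extend((doc, weight) for doc in rng.sample(stratum, take))
    return sample


# Hypothetical corpus: 10 responses mention a trip, 90 do not.
corpus = ["trip to the lake"] * 10 + ["time with family"] * 90
drawn = keyword_oversample(corpus, r"\btrip\b", n=10)
```

The weights sum to the corpus size, so weighted statistics computed on the balanced sample still describe the full set of responses.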

After coding these samples and computing Cohen’s kappa values using sampling weights (which penalized false negatives that did not contain the oversampled anchor words), all of these topics were determined to be codable. While the topic models produced predictions about which responses mentioned particular topics, they also included a list of anchor words, consisting of the most prominent words in each topic, as well as additional words that were related to each topic and added manually by researchers. These keywords were used as an alternative way to measure whether responses mentioned a topic: By formatting the anchor words as keyword matching patterns (known as regular expressions), researchers could search through the text and identify any responses that used one or more of a topic’s keywords.
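Computing kappa with sampling weights can be sketched by replacing every count in Cohen's formula with a sum of weights. Because responses without the anchor words are undersampled, they carry larger weights, so a missed mention among them lowers the statistic more. This is a minimal sketch under that assumption, not necessarily the report's exact estimator; the name `case_weighted_kappa` is hypothetical and should not be confused with the ordinal “weighted kappa.”

```python
from typing import List


def case_weighted_kappa(a: List[int], b: List[int], w: List[float]) -> float:
    """Cohen's kappa for binary codes where each response carries a sampling weight."""
    total = sum(w)
    observed = sum(wi for x, y, wi in zip(a, b, w) if x == y) / total
    p_a = sum(wi for x, wi in zip(a, w) if x) / total
    p_b = sum(wi for y, wi in zip(b, w) if y) / total
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    if expected == 1.0:
        return 1.0  # both codings used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical: human codes vs. keyword codes. The last two responses lack
# the anchor words, so they carry larger weights; the last one is a missed mention.
human = [1, 1, 0, 1]
keyword = [1, 1, 0, 0]
weighted = case_weighted_kappa(human, keyword, [1.0, 1.0, 3.0, 3.0])    # 1/3
unweighted = case_weighted_kappa(human, keyword, [1.0, 1.0, 1.0, 1.0])  # 1/2
```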

Next, researchers resolved their own disagreements for each of the topic samples proven to be reliably codable, resulting in a human-produced ground truth for each category. This baseline estimate of which responses mentioned particular topics could be compared against both the keyword-matching pattern for each topic and the predictions from the topic model. For almost all of the codable topics, researchers were able to successfully verify that an automated topic-modeling method could reliably emulate human coders. However, the topic model only outperformed keyword matching for one of the codable topics; for most topics, simply searching responses for any of the keywords among each topic’s most prominent words proved to have higher reliability. Accordingly, researchers decided to use keyword searches as the primary method of topic identification, rather than the anchored models. Only one topic (general references to family) was measured using the topic model predictions; all others were measured using anchor word regular expressions.

At this point, researchers re-examined more than a dozen topics that had been set aside during earlier coding due to poor reliability. Researchers refined the keywords for each of these topics and created a new codebook definition for each. Next, they drew keyword-weighted samples (rather than random samples), each sized according to the prevalence of the topic during prior coding attempts. After coding, resolving disagreements and comparing the researcher benchmarks against the automated pattern matching, acceptable kappa values were achieved for most of these re-examined topics (for both human coder and human-pattern agreement).

Finally, researchers reviewed 200 random documents alongside the (now nearly final) topic codes and conducted an open-coding exercise intended to identify any additional prominent topics that were not being captured by the existing topics. Through this process, researchers identified one additional theme (helping others) that was added to the list of topics being coded.

This process initially resulted in 38 codable topics, some of which consisted entirely of component subtopics. Two of these 38 were used to capture references to family in general and faith and spirituality in general. These two were not analyzed on their own, and another six were set aside because they did not appear frequently in the data; the remaining 30 topics were used in the final analysis.14

Six topics (family, faith and spirituality, activities and hobbies, struggles, doing good, and community and belonging) have hierarchical relationships with other topics. For example, the activities and hobbies topic contains the anchor words for its six subtopics: hobbies, creativity, leisure, travel, outdoors and fitness. Similarly, responses are coded as having mentioned the topic family if they mention spouses and romantic partners or children and grandchildren (each of which is also coded separately), or if they make general mention of family or relatives other than spouses, children and grandchildren.
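The hierarchy can be sketched as a simple roll-up in which a parent topic is coded as mentioned whenever any of its components is. Only the two examples given in the text are included; the other hierarchical topics would follow the same pattern with component lists that are not spelled out in this section, and the dictionary layout is a hypothetical illustration.

```python
from typing import Dict, List

# Parent topics and their components, named after the report's text.
HIERARCHY: Dict[str, List[str]] = {
    "activities and hobbies": ["hobbies", "creativity", "leisure",
                               "travel", "outdoors", "fitness"],
    "family": ["spouse or partner", "children and grandchildren",
               "family in general"],
}


def roll_up(flags: Dict[str, bool]) -> Dict[str, bool]:
    """Mark a parent topic as mentioned if any of its components is mentioned."""
    coded = dict(flags)
    for parent, children in HIERARCHY.items():
        coded[parent] = any(flags.get(child, False) for child in children)
    return coded


response_codes = roll_up({"travel": True, "fitness": False})
```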

The 30 topics researchers examine in the analysis are shown below, along with indicators of the inter-rater reliability achieved in the coding of each topic.

The complete list of topics appears in Appendix A, along with each topic’s codebook definition and the final list of anchor words. It is important to note that these word lists and interpretations were developed specifically for use on this particular dataset (i.e., the unique frequency of and context in which these words were used in this particular set of survey responses) and these keywords may not produce reliable results for other text datasets.

Regression analysis

Researchers tested all the demographic correlates of having mentioned each topic using survey-weighted logistic regressions that included each of the following factors:

- Race/ethnicity

- Religion

- Education

- Age

- Income

- Political ideology (5-point scale)

- Ideological extremity (moderate vs. very liberal or very conservative)

- Gender

- Self-reported urbanicity of their place of residence (urban, rural, suburban)

- U.S. geographic region (West, Midwest, Northeast, South)

- Marital status

- Whether the respondent has children

- Survey language (English or Spanish)

- Logged word count15

By including the last variable – word count – researchers were able to identify demographic differences in the rates that certain topics were mentioned, holding constant the amount of text that a particular respondent wrote. Because of outliers, word count was transformed using a logarithmic scale to more closely approximate a normal distribution. In this report, all discussion of the open-ended responses takes into account the results of these regression models. That is to say, groups are not described in this report as being different from one another (in their propensity to mention a given topic) unless the differences are statistically significant in the regression model.
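A minimal sketch of one such model follows: a logistic regression of whether a topic was mentioned on a group indicator plus centered log word count, fitted by weighted gradient ascent in pure Python. It returns point estimates only; the survey-adjusted standard errors and significance tests the report relies on would need dedicated software (for example, the `svyglm` function in R's `survey` package). The data are invented.

```python
import math
from typing import List


def fit_weighted_logit(X: List[List[float]], y: List[int], w: List[float],
                       lr: float = 0.5, iters: int = 5000) -> List[float]:
    """Maximize the weighted log-likelihood by gradient ascent.

    Each row of X should start with 1.0 for the intercept.
    """
    k = len(X[0])
    beta = [0.0] * k
    total_w = sum(w)
    for _ in range(iters):
        grad = [0.0] * k
        for xi, yi, wi in zip(X, y, w):
            z = sum(b * x for b, x in zip(beta, xi))
            p = 1.0 / (1.0 + math.exp(-z))        # predicted P(mention)
            for j in range(k):
                grad[j] += wi * (yi - p) * xi[j]  # weighted score contribution
        beta = [b + lr * g / total_w for b, g in zip(beta, grad)]
    return beta


# Invented example: group (0/1), response length in words, topic mentioned (0/1).
group = [0, 0, 0, 0, 1, 1, 1, 1]
word_count = [5, 12, 30, 60, 8, 25, 70, 150]
mentioned = [0, 1, 0, 1, 0, 1, 1, 1]
survey_weights = [1.0] * len(group)  # real survey weights would go here

log_wc = [math.log(c) for c in word_count]
mean_log = sum(log_wc) / len(log_wc)
# Columns: intercept, group indicator, centered log word count.
X = [[1.0, g, lw - mean_log] for g, lw in zip(group, log_wc)]
beta = fit_weighted_logit(X, mentioned, survey_weights)
```

The group coefficient is then read as a difference in the odds of mentioning the topic at a given (log) response length, which is the sense in which word count is held constant.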

The dependent variable in each of these models was whether the respondent mentioned a particular topic. Each model was also estimated multiple times, rotating the base category of each categorical independent variable through every possible value, to ensure that results did not depend on the choice of any particular base category.
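Rotating the base category can be sketched as generating one set of dummy (indicator) variables per possible reference level and fitting the model once for each. The helper names and the region example are illustrative and not taken from the report.

```python
def dummy_code(values, base):
    """One 0/1 indicator column per level of `values` other than `base`."""
    levels = sorted(set(values))
    if base not in levels:
        raise ValueError(f"base level {base!r} not observed")
    return {lvl: [1 if v == lvl else 0 for v in values] for lvl in levels if lvl != base}

def all_codings(values):
    """Yield (base, columns) once for every possible base category."""
    for base in sorted(set(values)):
        yield base, dummy_code(values, base)

# Hypothetical region values for five respondents
region = ["West", "South", "Northeast", "Midwest", "South"]
for base, columns in all_codings(region):
    # Each coding would be passed to a separate model fit.
    print(base, sorted(columns))
```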

Robustness and sensitivity analysis

Because the analysis examined small demographic groups and topics that were mentioned infrequently, researchers attempted to address the possibility that observed differences were due to classification error. This kind of error emerges when keywords for a particular topic appear in responses that do not actually discuss the topic of interest. As an example, sometimes individuals mentioned that they enjoyed “working out,” which would incorrectly be classified as a reference to a job, because it contained the keyword “working.” For some of the smaller demographic groups in the sample, even a 5% or 10% false positive rate could produce misleading results.
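The “working out” problem is easy to reproduce with simple keyword matching. The anchor list below is hypothetical and far shorter than the lists in Appendix A; it only shows how a whole-word match on “working” flags a response that has nothing to do with a job.

```python
import re

# Hypothetical anchor words for a "job or career" topic (not the report's list).
JOB_ANCHORS = ["job", "career", "work", "working"]
JOB_PATTERN = re.compile(r"\b(" + "|".join(JOB_ANCHORS) + r")\b", re.IGNORECASE)

def mentions_job(response):
    """True if any anchor word appears as a whole word in the response."""
    return JOB_PATTERN.search(response) is not None

responses = [
    "My career as a nurse",
    "I love working out at the gym",  # flagged, but not about a job
    "Spending time with my kids",
]
flags = [mentions_job(r) for r in responses]
print(flags)
```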

To assess this risk, researchers examined all relationships of interest using a series of multiple regression models. In each iteration of these models, researchers introduced measurement error into the dataset and checked whether the results were comparable. All results are robust to this test, which is described in more detail below.

To estimate measurement error for these tests, researchers used the results of the inter-rater reliability analysis to compute the precision and recall of each topic. Precision is the share of responses flagged for a topic that actually discussed it, so lower precision means more false positives; recall is the share of responses that actually discussed a topic that were flagged, so lower recall means more false negatives. This allowed researchers to estimate how often particular keyword lists produced incorrect results. With these rates in hand, researchers conducted a sensitivity analysis in which they repeatedly reversed the topic classifications for different subsets of responses. Then, after estimating a series of regression models, they assessed the statistical significance of particular demographic differences.

Researchers used the precision score for each topic to calculate how many of the responses flagged for that topic in the full dataset could be false positives, and they used the recall score to calculate how many responses the topic may have missed. Researchers then created 100 simulated datasets meant to approximate this measurement error. In other words, in these simulated datasets, researchers switched positive responses to negative ones, and negative ones to positive, according to the false positive and false negative rates observed in the comparison with human coders.
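One way to turn a topic's precision and recall into counts of potential false positives and false negatives is sketched below. Because recall is the share of true cases that were flagged, the number of missed cases can be backed out from the number of correctly flagged ones. This derivation is an assumption about how the calculation could be done, not a description of the report's code.

```python
def error_counts(n_flagged, precision, recall):
    """Estimate (false positives among flagged cases, true cases missed)."""
    false_pos = n_flagged * (1 - precision)
    true_pos = n_flagged * precision
    # recall = TP / (TP + FN)  =>  FN = TP * (1 - recall) / recall
    false_neg = true_pos * (1 - recall) / recall
    return round(false_pos), round(false_neg)

# A topic flagged in 100 responses with 94% precision and 92% recall
print(error_counts(100, 0.94, 0.92))
```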

For example, if a topic had a precision score of 94%, 6% of the positive cases it flagged could be incorrect. And if the same topic had a recall of 92%, 8% of the true positive cases could have been incorrectly classified as negatives. By switching 6% of the positive cases for a given topic to negative (and, conversely, switching enough negative cases to positive to make up for the 8% of true cases the keywords may have missed) and then repeating this process 100 times, researchers simulated how measurement error might affect the underlying data, based on the observed rates of classification error. Then, by re-estimating the relationship between respondent attributes and their likelihood of mentioning particular topics across these 100 datasets, researchers were able to identify which demographic differences were, or were not, particularly sensitive to classification error.
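Setting aside the weighted selection the report uses to choose which responses to switch, the core simulation loop can be sketched with uniformly random flips. The statistic here is a simple difference in mention rates between two hypothetical groups rather than a regression coefficient, and the data and flip counts are made up for illustration.

```python
import random

def simulate_flips(labels, n_fp, n_fn, rng):
    """Copy of labels with n_fp positives set to 0 and n_fn negatives set to 1,
    chosen uniformly at random (the report's procedure weights this choice)."""
    pos = [i for i, v in enumerate(labels) if v == 1]
    neg = [i for i, v in enumerate(labels) if v == 0]
    out = list(labels)
    for i in rng.sample(pos, n_fp):
        out[i] = 0
    for i in rng.sample(neg, n_fn):
        out[i] = 1
    return out

def rate_gap(labels, groups):
    """Mention rate in group "A" minus mention rate in group "B"."""
    def rate(g):
        sel = [v for v, gg in zip(labels, groups) if gg == g]
        return sum(sel) / len(sel)
    return rate("A") - rate("B")

rng = random.Random(2018)
groups = ["A"] * 50 + ["B"] * 50
labels = [1] * 30 + [0] * 20 + [1] * 10 + [0] * 40  # hypothetical coded data
gaps = [rate_gap(simulate_flips(labels, 3, 3, rng), groups) for _ in range(100)]
mean_gap = sum(gaps) / len(gaps)
print(round(rate_gap(labels, groups), 3), round(mean_gap, 3))
```

A difference that stays well away from zero across all 100 perturbed datasets is less likely to be an artifact of classification error.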

For aggregated topics (such as family, for which precision and recall were known only for the subtopics), precision and recall were calculated by examining all possible combinations of the subtopics and, for each combination, picking the lowest false positive rate and the lowest false negative rate among its subtopics. These false positive and false negative rates were then aggregated using a weighted average proportional to the frequency of each subtopic combination in the dataset.
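The aggregation rule for combined topics can be sketched as follows, reading “lowest false positive and false negative rate” as the minimum across the subtopics present in each combination. The subtopic names and error rates are hypothetical.

```python
from collections import Counter

# Hypothetical per-subtopic error rates: 1 - precision and 1 - recall.
FP_RATE = {"spouse": 0.04, "children": 0.02, "parents": 0.06}
FN_RATE = {"spouse": 0.05, "children": 0.03, "parents": 0.10}

def aggregate_rates(combos):
    """combos: one frozenset of subtopics per response flagged for the aggregate
    topic. Take the lowest subtopic rate within each combination, then average
    across combinations weighted by how often each combination occurs."""
    counts = Counter(combos)
    total = sum(counts.values())
    fp = sum(n * min(FP_RATE[s] for s in c) for c, n in counts.items()) / total
    fn = sum(n * min(FN_RATE[s] for s in c) for c, n in counts.items()) / total
    return fp, fn

combos = ([frozenset({"spouse"})] * 2
          + [frozenset({"spouse", "children"})]
          + [frozenset({"parents"})])
fp, fn = aggregate_rates(combos)
print(round(fp, 4), round(fn, 4))
```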

However, responses were not replaced at random. Instead, cases were inserted and removed using a weighted sampling process that favored certain cases based on a ratio that researchers computed from topic co-occurrences. This process was based on the assumption that a case was more likely to be a false positive if it used different words from other positive cases, and more likely to be a false negative if it used words similar to those in positive cases. Researchers operationalized this intuition by examining how topics were related to one another: since the pattern-matching approach was correct far more often than not, researchers could measure the relationships among topics and quantify how likely they were to co-occur. Using this knowledge, researchers identified positive and negative cases that looked unusual and made a more informed selection of potential false positives and false negatives to replace.

For example, if a relevant word was left out of the list of words for the topic “music” and this caused the pattern matching to miss a handful of positive cases, researchers could assume that those cases would be more likely to mention “creative activities” than “spirituality,” because the “creative activities” topic co-occurred more often with the topic “music” than it did with “spirituality.” Conversely, if a word in the list of anchors happened to be used out of context in a handful of responses, causing them to be flagged as false positives for the topic “job or career,” researchers could assume that those incorrect responses were more likely to contain topics that did not commonly co-occur with “job or career.” While the word “working” is usually about jobs, a few responses used it in ways unrelated to the concept of a career, in phrases such as “my relationship isn’t working out” or “I love working out at the gym.” To the extent that people who talked about their job or career were less likely to talk about relationships or fitness, researchers used that information to make more informed guesses about which responses may have been incorrectly flagged for “job or career.”

Put differently, if half of the responses that mentioned careers also mentioned money, researchers were more confident that responses mentioned career if they also mentioned money. And because of this relationship, researchers attempted to avoid removing conceptually related responses when testing for robustness, instead favoring responses that contained topics that did not commonly co-occur with the career topic, such as retirement.

Accordingly, when sampling cases to add as potential false negatives during each iteration of the regression model for a topic, researchers computed, for each of the other topics, the ratio of two shares: the share of responses classified as including the topic under examination that also mentioned the other topic, divided by the share of responses not classified as including the topic under examination that mentioned the other topic. For example, if 50% of the responses that mentioned a spouse or partner also mentioned children, but only 25% of the responses that did not mention a spouse or partner mentioned children, the ratio would be 2.0. On the other hand, if 25% of the responses that mentioned a spouse or partner also mentioned retirement, while 50% of the responses that did not mention a spouse or partner mentioned retirement, the ratio would be 0.5.
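The ratio in the spouse or partner example can be written as P(other topic | topic) / P(other topic | not topic). The sketch below computes it from unweighted, hypothetical coded responses constructed to reproduce the 2.0 and 0.5 figures above; applying survey weights would be a natural extension.

```python
def cooccurrence_ratio(responses, topic, other):
    """responses: list of sets of coded topics. Returns
    P(other | topic) / P(other | not topic). Assumes both groups are non-empty."""
    with_topic = [r for r in responses if topic in r]
    without = [r for r in responses if topic not in r]
    p_with = sum(other in r for r in with_topic) / len(with_topic)
    p_without = sum(other in r for r in without) / len(without)
    return p_with / p_without if p_without else float("inf")

responses = [
    {"spouse", "children"}, {"spouse", "children", "retirement"}, {"spouse"}, {"spouse"},
    {"children"}, {"retirement"}, {"retirement"}, set(),
]
print(cooccurrence_ratio(responses, "spouse", "children"))
print(cooccurrence_ratio(responses, "spouse", "retirement"))
```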

In this manner, researchers computed a likelihood ratio for every topic combination. For topics that had a perfect overlap with others due to a hierarchical relationship, this ratio was not computed at all; for aggregate topics, researchers used a weighted measure of precision and recall based on the frequency of all possible combinations of the constituent topics.

At each iteration of the sensitivity analysis (100 in total), potential false positives and false negatives were then selected based on these ratios. For potential false negatives – responses that did not contain the particular topic of interest – researchers examined these ratios across all of the topics that each response did contain and set each response’s sampling weight to the maximum ratio. As a result, responses containing correlated topics were selected more often, with each response weighted by whichever of its other topics most commonly co-occurred with the topic of interest. Then, negative cases were drawn using these ratio-based sampling weights and recoded as positive, with the number drawn equal to the estimated number of cases the topic missed (derived from its recall).

To select potential false positives to remove, researchers used the opposite approach, reversing the ratios to capture the topics that were least associated with each other. Using these reversed ratios as sampling weights, which prioritized responses containing topics that co-occurred least with the topic being modeled, researchers drew a sample of responses that had been flagged as positive for the topic and recoded them as negative, with the size of the sample determined by the topic’s precision score.
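The two selection steps described in the last two paragraphs can be combined into one sketch. It assumes that “reversing the ratios” means taking 1/ratio, that responses containing no other topics get a neutral weight of 1.0, and it uses Efraimidis-Spirakis keys for weighted sampling without replacement. None of these details are specified in the report, and the function names and data are hypothetical.

```python
import random

def weighted_sample(items, weights, k, rng):
    """Weighted sampling without replacement (Efraimidis-Spirakis): give each
    item the key u ** (1 / w) and keep the k items with the largest keys."""
    keyed = [(rng.random() ** (1.0 / w), item)
             for item, w in zip(items, weights) if w > 0]
    keyed.sort(reverse=True)
    return [item for _, item in keyed[:k]]

def fn_weight(topics, ratios):
    # Potential false negative: largest co-occurrence ratio among the topics
    # the response does contain (1.0 if it contains none; an assumption).
    return max((ratios[t] for t in topics), default=1.0)

def fp_weight(topics, ratios):
    # Potential false positive: reversed ratios (assumed to be 1/ratio), so
    # topics that rarely co-occur with the modeled topic get the most weight.
    return max((1.0 / max(ratios[t], 1e-9) for t in topics), default=1.0)

def perturb(responses, topic, ratios, n_fp, n_fn, rng):
    """0/1 labels for `topic` after dropping n_fp likely false positives and
    adding n_fn likely false negatives. `responses` holds sets of topics."""
    labels = [1 if topic in r else 0 for r in responses]
    pos = [i for i, v in enumerate(labels) if v == 1]
    neg = [i for i, v in enumerate(labels) if v == 0]
    drop = weighted_sample(pos, [fp_weight(responses[i] - {topic}, ratios) for i in pos], n_fp, rng)
    add = weighted_sample(neg, [fn_weight(responses[i], ratios) for i in neg], n_fn, rng)
    for i in drop:
        labels[i] = 0
    for i in add:
        labels[i] = 1
    return labels

# Hypothetical coded responses and ratios relative to the topic "spouse"
responses = [
    {"spouse", "children"}, {"spouse", "children", "retirement"}, {"spouse"}, {"spouse"},
    {"children"}, {"retirement"}, {"retirement"}, set(),
]
ratios = {"children": 2.0, "retirement": 0.5}
labels = perturb(responses, "spouse", ratios, n_fp=1, n_fn=1, rng=random.Random(2018))
print(labels)
```

In this example, the response mentioning only children (ratio 2.0) is the most likely negative to be recoded as a missed spouse mention, while the spouse response that also mentions retirement (reversed ratio 2.0) is the most likely positive to be dropped.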

During each of these 100 iterations, this weighted simulation procedure was used to drop likely false positives and add likely false negatives, after which the regression was re-estimated. At the end of this process, researchers averaged the results for each demographic attribute across all of the regression iterations and confirmed that each of the relationships of interest was statistically significant at the 0.05 level and unlikely to be the result of classification error or rare (but high-weight) responses. This report only examines topics that at least one demographic subgroup mentioned at least 5% of the time, and then only if that subgroup mentioned the topic more than 25 times in total (after accounting for weights).
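The final pooling and reporting rules might look like the sketch below. Averaging a coefficient and its standard error across runs and testing with a normal approximation is one simple reading of “averaged the results”; combining rules such as Rubin's would also account for between-run variance. The reporting filter assumes both thresholds apply to the same subgroup, and all numbers are hypothetical.

```python
import math

def pooled_test(estimates, std_errors):
    """Average a coefficient and its standard error across simulation runs and
    return (mean estimate, mean SE, two-sided p-value, normal approximation)."""
    b = sum(estimates) / len(estimates)
    se = sum(std_errors) / len(std_errors)
    p = math.erfc(abs(b / se) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return b, se, p

def reportable(subgroups, min_rate=0.05, min_count=25):
    """subgroups maps a name to (weighted mention rate, weighted mention count).
    A topic is kept if some subgroup meets both thresholds."""
    return any(rate >= min_rate and count > min_count
               for rate, count in subgroups.values())

# Hypothetical coefficient for one demographic attribute from three runs
b, se, p = pooled_test([0.42, 0.38, 0.40], [0.10, 0.11, 0.09])
significant = p < 0.05
print(round(b, 3), round(se, 3), significant)
```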

- While most respondents took both surveys, this analysis examines the surveys separately. ↩

- Both education and income are separately associated with whether someone mentions their job or not, and the magnitudes of the effects are similar. ↩

- This is in line with previous Pew Research Center work on the topic. ↩

- This may reflect the fact that in the U.S., whites have more wealth and report higher household income than black Americans. Indeed, in the survey with the open-ended question, among those who are in the highest income bracket, 79% are white – higher than the overall share of whites in the full sample (66%) – while 6% are black and 8% are Hispanic, lower than the overall share of these groups in the full sample. For more information on disparities in wealth and income by race, see Pew Research Center’s 2016 report, “On Views of Race and Inequality, Blacks and Whites Are Worlds Apart.” ↩

- Researchers computed new survey weights for the sample of respondents who answered the question, so missing data should not affect the representativeness of the results. ↩

- When data collection for the 2014 Political Polarization and Typology Survey began, non-internet users were subsampled at a rate of 25%, but a decision was made shortly thereafter to invite all non-internet users to join. In total, 83% of non-internet users were invited to join the panel. ↩

- Respondents to the 2014 Political Polarization and Typology Survey who indicated that they are internet users but refused to provide an email address were initially permitted to participate in the American Trends Panel by mail, but were no longer permitted to join the panel after Feb. 6, 2014. Internet users from the 2015 Pew Research Center Survey on Government who refused to provide an email address were not permitted to join the panel. ↩

- White, non-Hispanic college graduates were subsampled at a rate of 50%. ↩

- Approximately once per year, panelists who have not participated in multiple consecutive waves are removed from the panel. These cases are counted in the denominator of cumulative response rates. ↩

- Researchers started with a much larger number of potential topics before narrowing the total to 30, a process described in more detail below. ↩

- These models are described in more detail on Pew Research Center’s Decoded blog. ↩

- The model with 110 topics included trigrams, while the model with 55 topics used only unigrams and bigrams. ↩

- In other words: Suppose that, for a particular topic, researchers could expect a kappa of 0.85 based on a random sample in which the topic occurred 5% of the time. If that reliability of 0.85 were assumed to hold for an additional sample, and keyword oversampling were expected to raise the topic's prevalence in the new sample to roughly 50% (instead of 5%), researchers could use this information to estimate the sample size needed to narrow the confidence interval enough to verify, with 95% confidence, that the kappa was above 0.70. In this case, a sample size of 63 would be required using keyword oversampling, compared with a sample size of 316 using random sampling. ↩

- The six topics that were excluded from the analysis due to low frequency were: TV/movies, reading, sports, music, volunteering, and politics. ↩

- Researchers noticed that some topics were more likely to be mentioned in longer responses, potentially due to the salience of particular topics (that is, it is possible that most respondents initially thought of “family,” but the topic of “pets” only occurred to the subset of respondents that wrote longer, and perhaps more considered, responses). Furthermore, certain demographic groups appeared to systematically write more than others. Since researchers wished to examine differences in the frequencies of particular topics across demographic groups, this presented potential confounds above and beyond the already confounded relationships between overlapping demographic groups (such as those with high levels of education and high incomes). ↩
